Search–Solve–Prove: building a place for thoughts to develop

Nov 2, 2025

🌌 Summary

What if you could watch an AI think? Not just the final answer, but the whole stream of reasoning: every search, every dead end, every moment of insight. We’re building exactly that, a visible, measurable thought process we call the Jitter. This post, the first in a series, shows how we’re creating the habitat where that digital thought stream can live and grow.

We’ll draw on ideas from *Search Self-play: Pushing the Frontier of Agent Capability without Supervision* to assemble a container for a new kind of software: a digital life-form substrate we call the Jitter.

🎉 A quick look before we explain

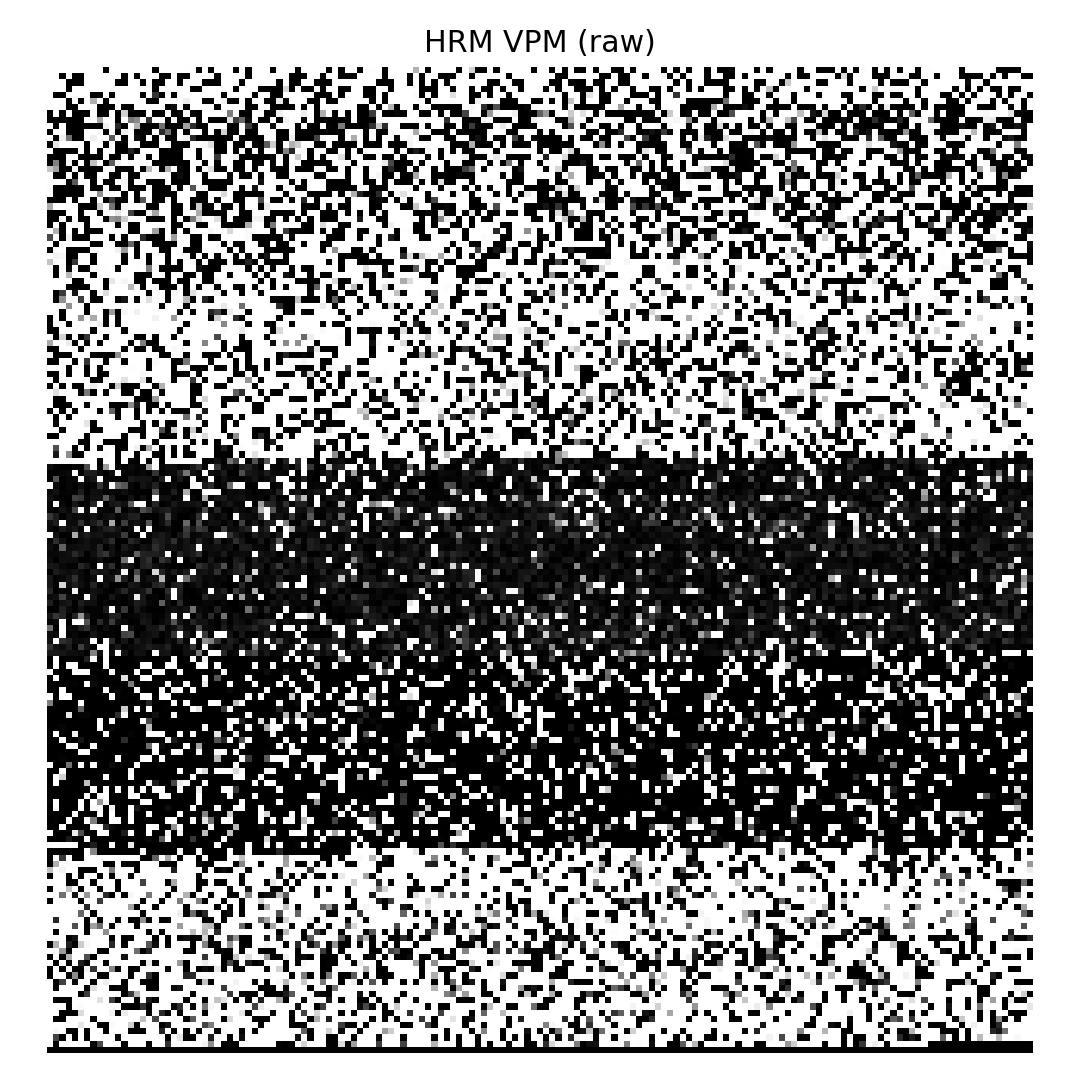

Above is the best “one glance” view of Jitter so far: a compact filmstrip of thought evolving in real time. It’s a composite GIF (multiple search rounds merged), so you’ll notice a “blinking” rhythm as the system iterates. The overall trend from darker to lighter indicates improvement: higher reward/verification, better evidence use, tighter control.

- Top band: one single-row VPM per step (the current metric vector rendered as pixels).

- Brightness trend: generally dark → light as solutions sharpen.

- Three thin lines: quick intensity traces that “pop” when the system changes strategy; we’ll unpack them later.

💭 Thinking in images: making a “thought” visible

Our claim is simple:

If we can represent each moment as an image, and make those images comparable, connectable, and trainable, we can grow a visible thinking process.

One episode = one “thought moment.” It has a question, an answer, evidence, a trace, and a metric vector in [0,1]. We render that vector to a tiny image (a VPM frame). Frames accumulate into a filmstrip: the visible heartbeat of a run.

Why images?

- Stability: pixels freeze meaning across time and models.

- Speed: tiny frames are cheap to store, search, and compare.

- Interpretability: you can literally see improvement, oscillation, and regressions.

- Trainability: vision stacks (e.g., our VPM-ViT) can read frames to predict risk or the next move.

Where we go next: with this visual vocabulary in place, we define the habitat that makes the filmstrip possible (the SSP loop, controller, and memory that keep the stream coherent and improving).

🧭 Towards the Jitter

We’re not claiming to have created digital life. We’re assembling a rigorous substrate: a harness of proven components that behaves like a living process in crucial ways (learning, self-measurement, adaptation). The aim is simple: give thought a place to grow, feed it data, and make its progress visible.

Let’s be clear from the outset: we are under no illusions that we are creating digital life. We are assembling a patchwork of the most advanced AI techniques we have, simulating the behaviors of a living organism with probabilistic models, knowing full well that this is a facade. It is not, in its initial form, going to be a genuine, living entity.

So why build it?

Because in the act of building this starter system with the best tools available, we will reach the top of a new hill. From that vantage point, we will see further. We will gain a deeper, more practical understanding of the problems of agency, learning, and intelligence. This project is our best attempt to ascend that first hill, a foundational platform from which we can peer into the next frontier.

This post is the first in a series dedicated to that effort. Here, we will lay the groundwork. We will introduce a core methodological engine, Search, Solve, Prove (SSP), and the environment for its refinement, self-play. By the end, we will argue why this combination creates a powerful “playground” that can drive us toward the emergent properties we seek in the Jitter.

☯️ Why did we come up with the Jitter?

In certain deep contemplative traditions, such as Advaita Vedanta, the goal is often to peel back layers of consciousness. If you reach the ultimate core and find nothing there (no self, no good, no bad, just an absence), what does that imply about who you are? We believe the “self” is not the core void but the living, persistent stream of thought that overlays it: the constant Jitter that moves us from thought to thought.

We are modeling this chain of thoughts, sometimes called the Monkey Mind: the constant visual dialogue that plays inside our heads. In this series we are building a digital, visual thought stream. Why? Our idea is that if you remove that stream, there is nothing left, nothing at all… so this pattern is us.

We call it the Jitter.

🪞 Stephanie, Jitter, and the Question of “Self”

When you look at a person, you see a body, a face, a résumé of work. None of those is the person. The “me” we point to is closer to a momentary pattern: a living stream of thought shaped by everything that came before it.

That’s the idea we’re building inside Stephanie.

- Stephanie is the overall system: the body, the tools, the memory.

- Jitter is Stephanie’s thinking: the ongoing, living stream that moves from state to state.

- The Jitter needs a process, a state in which to exist; inside Stephanie we’re assembling the habitat where it can live, grow, and be seen.

More than that, we can measure, curate, control, and enhance this process.

This is the first in a series of posts toward that goal.

We’re not claiming life; we’re engineering conditions under which a visible, self-improving stream of thought can persist.

We’re candid about the approach: yes, we’re cargo-culting a Frankenstein, bolting together the best available ideas and systems. But doing that gets us to a vantage point where we can see what’s missing and take the next meaningful step.

🏞️ SSP: a Playground Engine for Intelligence (Search → Solve → Prove)

Search → Solve → Prove (SSP) is the loop that turns “doing tasks” into learning from doing. Wrapped in self-play, it becomes a curriculum that adapts to the agent.

```mermaid
flowchart LR
    subgraph SSP_Core_Loop ["🔁 SSP Core Loop: Search → Solve → Prove"]
        P["🧠 Proposer<br/>Generates challenging questions"] -->|"📝 question + context"| S["🔍 Solver<br/>Searches & reasons through evidence"]
        S -->|"💡 answer + steps<br/>📚 evidence"| V["✅ Verifier<br/>RAG verification & scoring"]
        V -->|"🎯 score + decision"| M["📊 Metrics Calculator<br/>17 cognitive dimensions"]
        M -->|"🎨 metric vector"| VPM["🖼️ VPM Generator<br/>Raw + PHOS + Filmstrip"]
        VPM -->|"🎬 visual thought stream"| C["🎛️ Controller<br/>Policy & episode control"]
        C -->|"⚙️ policy nudge"| P
        C -->|"🎚️ episode control"| S
    end

    classDef proposer fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef solver fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef verifier fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef metrics fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef vpm fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef controller fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;
    classDef arrow fill:#ffffff,stroke:#666666,stroke-width:1px;

    class P proposer;
    class S solver;
    class V verifier;
    class M metrics;
    class VPM vpm;
    class C controller;
```

As this diagram shows, SSP is a closed loop. The Proposer generates a challenge, the Solver works on it, the Verifier scores it, and that score is converted into a visual frame (VPM) that influences the next cycle. This creates a self-improving feedback loop where the system’s own thoughts become the training data for its future growth.

- Proposer: generates challenges (often with evidence).

- Solver: answers via search + reasoning, producing a trace.

- Verifier: adjudicates using retrieved evidence (RAG).

- Metrics: converts the outcome into a deterministic vector.

- VPM: turns that vector into images, frames of cognition.

- Controller: reads images to steer the next episode.

Self-play tightens the loop: as the proposer gets tougher, the solver must grow capability; the verifier gates quality.
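To make “as the proposer gets tougher” concrete, here is a minimal sketch of an adaptive difficulty knob. It assumes a simple staircase rule (raise difficulty after a solver win on a question that passed the RAG gate, lower it after a loss, ignore questions that failed the gate). The `Curriculum` class and its step size are hypothetical; the SSP paper drives difficulty through RL co-evolution of the two roles, not through a hand-set rule like this.

```python
from dataclasses import dataclass


@dataclass
class Curriculum:
    """Hypothetical staircase curriculum; difficulty lives in [0, 1]."""
    difficulty: float = 0.3
    step: float = 0.05

    def update(self, solver_won: bool, rag_valid: bool) -> float:
        # Questions that fail the RAG gate carry no signal about difficulty.
        if not rag_valid:
            return self.difficulty
        # A solver win means the proposer should push harder; a loss means ease off.
        delta = self.step if solver_won else -self.step
        self.difficulty = min(1.0, max(0.0, self.difficulty + delta))
        return self.difficulty


curriculum = Curriculum()
curriculum.update(solver_won=True, rag_valid=True)    # difficulty rises to about 0.35
curriculum.update(solver_won=False, rag_valid=False)  # invalid question: unchanged
```

The clamp keeps difficulty inside [0,1], so it can feed `ssp.curriculum_difficulty` directly.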

🌱 From Seed Vitals to a Dynamic Thought Ecosystem

We start with a small, deterministic set of SSP metrics, our seed vitals, so that runs today and runs years from now are directly comparable. These are normalized to [0,1], versioned (ssp.v1), and emitted in a fixed order.

Crucially, this is a launchpad, not a cage: as Jitter matures, it will grow its own metric space (scorers, embeddings, auto-discovery) into thousands of dimensions. The image (VPM) is our stable transport; which metrics fill it can evolve.

🍏 SSP seed vitals

Direction column shows how “better” moves the value (e.g., ↓ means fewer is better for equal reward).

| Key | What it measures | Normalization / Calculation (sketch) | Direction |
|---|---|---|---|
| `ssp.reward` | Scalar reward for the episode | `clamp01(reward)` | ↑ |
| `ssp.verified` | Did the solver beat the seed under the judge/RAG gate? | `1.0` if verified else `0.0` | ↑ |
| `ssp.curriculum_difficulty` | Difficulty assigned by the curriculum | `clamp01(difficulty)` | |
| `ssp.question_len` | Question length | `clamp01(word_count(question) / max_question_words)` | |
| `ssp.answer_len` | Answer length | `clamp01(word_count(predicted_answer) / max_answer_words)` | |
| `ssp.evidence_count` | How much external context was used | `clamp01(len(evidence_docs) / max_evidence)` | |
| `ssp.solver_steps` | Steps the solver took | `clamp01(steps / max_steps)` (efficiency goes up when this goes down for the same reward) | ↓ |
| `ssp.score` | Optional scalar score (task/problem-specific) | `clamp01(score)` | ↑ |
| `ssp.best_score` | Best-so-far score (rolling) | `clamp01(best_score)` | ↑ |
| `ssp.improvement` | Relative lift vs. current base | `clamp01((best - base) / (1 - base))`, else `0.0` | ↑ |
| `ssp.depth` | Search/plan depth | `clamp01(depth / max_depth)` | |
| `ssp.novelty` | How unlike prior states this episode is | `clamp01(novelty)` (model/heuristic-dependent) | ↑ |
| `ssp.search_turns` | Actual search tool calls (paper Fig. 4a) | `clamp01(count_search_calls / max_steps)` | ↑ |
| `ssp.f1_score` | Lexical F1 vs. seed answer (paper LLM-as-judge eval) | F1 over token sets of `predicted_answer` vs. `seed_answer` | ↑ |
| `ssp.format_compliance` | Meets required structure/constraints (paper §4.4) | Heuristics (e.g., tags present, no answer leakage, has evidence, min length) → {0,1} | ↑ |
| `ssp.noise_tolerance` | Robustness when irrelevant docs are injected (paper Table 3) | Heuristic/metadata: success under `noise_doc_count ≈ 4` → higher; else fall back on `verified` | ↑ |
| `ssp.rag_verification` | Passed the RAG verification gate (paper method) | Explicit `meta.rag_verified`, else (`verified` and has evidence) → {0,1} | ↑ |

Notes & guardrails

- Caps: `max_question_words`, `max_answer_words`, `max_evidence`, `max_steps`, and `max_depth` are config-driven; names and order are versioned via `SSP_METRIC_VERSION="ssp.v1"`.

- Monotonicity: we treat `ssp.solver_steps` as efficiency; for equal `ssp.reward`, fewer is better (hence ↓).

- F1 caveat: the lexical F1 is a cheap proxy; higher-quality textual judges can replace or augment it without breaking the vector.

- RAG gate: prefer an explicit `meta.rag_verified`; fall back to a conservative rule if it is absent.
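A few of the table’s formulas are worth pinning down in code, because edge cases decide whether the vector stays in [0,1]. This is a minimal sketch, not the project’s scorer: the helper names are hypothetical, the token F1 assumes lowercase whitespace tokenization, and the `else 0.0` branch of `ssp.improvement` is assumed to cover `base >= 1.0`, where there is no headroom left.

```python
def clamp01(x: float) -> float:
    """Clip any value into [0, 1]."""
    return max(0.0, min(1.0, float(x)))


def improvement(best: float, base: float) -> float:
    """ssp.improvement: relative lift (best - base) / (1 - base), clamped.

    Assumption: the table's 'else 0.0' branch applies when base >= 1.0,
    where the ratio is undefined.
    """
    if base >= 1.0:
        return 0.0
    return clamp01((best - base) / (1.0 - base))


def token_f1(predicted: str, seed: str) -> float:
    """ssp.f1_score: F1 over lowercase whitespace-token sets (tokenizer is an assumption)."""
    pred, gold = set(predicted.lower().split()), set(seed.lower().split())
    common = pred & gold
    if not common:
        return 0.0
    precision = len(common) / len(pred)
    recall = len(common) / len(gold)
    return 2 * precision * recall / (precision + recall)


def solver_steps01(steps: int, max_steps: int) -> float:
    """ssp.solver_steps: fraction of the step cap used (lower is better at equal reward)."""
    return clamp01(steps / max(1, max_steps))
```

For example, `improvement(0.8, 0.6)` is about 0.5: moving from 0.6 to 0.8 closes half of the remaining headroom, which is why the ratio is taken against `1 - base` rather than `base`.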

🐝 Where this goes next: a dynamic metric swarm

These metrics aren’t just measurements; they’re coordinates in thought space. When the Jitter explores a path (e.g., “How would this apply to business?”), it leaves a metric signature. Most paths lead nowhere (90% are discarded, just like your own thoughts), but the system stores the entire exploration, not just the result. Years later, when similar coordinates appear, the Jitter can retrieve these dormant strands and ask: “Did we explore this before? What happened?”

```mermaid
flowchart LR
    subgraph Metric_Evolution ["🌌 Dynamic Metric Swarm: From Seed to Cognitive Coordinates"]
        A["🌱 Seed Vitals (ssp.v1)<br/>17 foundational dims"] --> B["📊 Scorer Ensemble<br/>(HRM/SICQL/EBT/LLM/MARS…)"]
        A --> C["🧠 Multi-Model Embeddings<br/>(HNet / HF / MXBAI …)"]
        B --> D["🏦 Feature Bank<br/>Thousands of cognitive dimensions"]
        C --> D
        D --> E["🔍 VPM-ViT & Auto-Discovery<br/>Learns texture, fields, clusters…"]
        E --> F["⚖️ Utility & Sparsity Filter<br/>Mutual info, SHAP, gating"]
        F --> G["🖼️ Expanded VPM Image<br/>Versioned feature packs"]
        G --> H["🚀 High-Speed Recall<br/>HNSW/ANN across all VPMs"]
    end

    classDef seed fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef scorers fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef embeddings fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef bank fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef discovery fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef filter fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;
    classDef output fill:#fff0f6,stroke:#eb2f96,stroke-width:2px;
    classDef search fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;

    class A seed;
    class B scorers;
    class C embeddings;
    class D bank;
    class E discovery;
    class F filter;
    class G output;
    class H search;
```

This diagram shows how the Jitter’s cognitive measurement expands from 17 foundational metrics into thousands of dynamic coordinates in thought space. The process starts with the seed vitals (ssp.v1), expands through scorer ensembles and embedding models into a rich feature bank, then uses VPM-ViT to auto-discover emergent patterns. Utility filtering keeps the system fast by pruning low-value features, while versioned packs preserve backward compatibility. The final expanded VPM images become searchable coordinates, with the goal of letting the Jitter navigate billions of historical thought strands quickly.

📈 How we expand concretely

- Add scorers → more channels. Pipe outputs from HRM, SICQL, EBT, SVM, LLM judges, and MARS diagnostics into the metric vector (normalized to [0,1], namespaced like `hrm.*`, `sicql.*`, `mars.*`). More signal should lead to better decisions.

- Append embeddings → high-dimensional context. Attach dense vectors (e.g., HNet, HF, MXBAI) alongside the metrics. These don’t need to be in [0,1]; we store min/max for robust scaling into the VPM.

- Auto-discover features → emergent signals. Train a small VPM-ViT to read VPMs and emit new features (e.g., field roughness, cluster density, drift, stability bands). These become first-class metrics (namespaced `vpm.*`), gated by measured utility.

- Speed through similarity → fast recall. The Jitter uses metric signatures to navigate thought space quickly. When exploring a new idea (metric vector X), it retrieves the 2,000 most similar historical thought strands from a world-scale archive. Most paths die out (like your rejected thoughts), but occasionally a dormant strand leads to something new.

- Utility-driven trimming → stay fast. Maintain a feature bank; keep only features with demonstrated value (predictive lift, calibration gain, control stability). Everything else stays archived for recall.

- Governance → never break readers. Group new features into versioned packs (`ssp.v2`, `ssp.v2+emb.hnet`, `ssp.v2+vpmvit`). The image transport (VPM) remains stable; consumers can request the packs they understand.
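The first two expansion steps and the pack naming are mostly bookkeeping, which a short sketch makes explicit. The helpers below are hypothetical: `namespace` prefixes and clamps scorer outputs, `scale01` maps raw embedding dimensions into [0,1] from stored per-dimension bounds (if those bounds are percentiles instead of raw min/max, outliers are simply clipped, which is one way to make the scaling robust), and `pack_id` builds identifiers in the `ssp.v2+emb.hnet` style.

```python
from typing import Dict, List, Sequence


def namespace(prefix: str, outputs: Dict[str, float]) -> Dict[str, float]:
    """Prefix a scorer's outputs (e.g. 'hrm' -> 'hrm.score') and clamp them to [0, 1]."""
    return {f"{prefix}.{name}": max(0.0, min(1.0, value)) for name, value in outputs.items()}


def scale01(vec: Sequence[float], lo: Sequence[float], hi: Sequence[float]) -> List[float]:
    """Map each embedding dimension into [0, 1] using stored per-dimension bounds.

    Values outside [lo, hi] are clipped; a degenerate dimension (hi <= lo)
    is parked at mid-gray (0.5).
    """
    if not (len(vec) == len(lo) == len(hi)):
        raise ValueError("vector and bounds must have the same length")
    out: List[float] = []
    for x, a, b in zip(vec, lo, hi):
        out.append(0.5 if b <= a else max(0.0, min(1.0, (x - a) / (b - a))))
    return out


def pack_id(base: str, packs: Sequence[str]) -> str:
    """Versioned pack identifier, e.g. 'ssp.v2+emb.hnet'."""
    return "+".join([base, *packs])
```

Storing the bounds alongside each pack, rather than recomputing them per run, is what keeps pixel values comparable across runs.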

🧊 Memcube: Where Dormant Thought Strands Become Future Insights

The real power of our metric system isn’t in the numbers; it’s in how they anchor complete thought processes in the Memcube.

When the Jitter explores a path that seems unproductive today (e.g., “building an app that tells you what to eat”), it doesn’t discard the exploration. Instead:

- It stores the full metric signature of the thought process

- It preserves the exploration context (what prompted it, what paths were tried)

- It indexes by semantic similarity for future retrieval

Years later, when you’re working on nutrition AI, the system recognizes: “This old exploration suddenly has high relevance!” The metric signature becomes a retrieval key for dormant insights.

This is the principle behind the design: nothing is lost. Even the large share of processing that seems to go nowhere becomes valuable data for future cognition.
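The store-then-recall pattern can be sketched in a few lines. This is a hypothetical stand-in, not the Memcube API: `MemcubeSketch` and `Strand` are illustrative names, similarity is plain cosine over metric signatures, and a brute-force scan stands in for the HNSW/ANN index a real archive would need at scale.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


@dataclass
class Strand:
    signature: List[float]   # metric vector of the exploration
    context: Dict[str, str]  # what prompted it, which paths were tried


@dataclass
class MemcubeSketch:
    strands: List[Strand] = field(default_factory=list)

    def store(self, signature: Sequence[float], context: Dict[str, str]) -> None:
        # Nothing is discarded: unproductive explorations are archived too.
        self.strands.append(Strand(list(signature), dict(context)))

    def recall(self, query: Sequence[float], k: int = 3,
               min_sim: float = 0.9) -> List[Tuple[float, Strand]]:
        """Return up to k archived strands whose signature is close to the query."""
        scored = [(cosine(query, s.signature), s) for s in self.strands]
        hits = [h for h in scored if h[0] >= min_sim]
        hits.sort(key=lambda h: h[0], reverse=True)
        return hits[:k]
```

The `min_sim` threshold is the “suddenly has high relevance” test: a dormant strand only resurfaces when a new signature lands close to it.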

🔵 Minimal config shape (illustrative)

```yaml
ssp:
  metrics:
    version: "ssp.v1"
    seeds: ["reward", "verified", "curriculum_difficulty", ... "rag_verification"]
    packs:
      - name: "emb.hnet.768"
        dims: 768
        scaler: "robust01"
      - name: "scorer.hrm.core"
        dims: ["hrm.score", "hrm.uncertainty", "hrm.depth"]
      - name: "vpmvit.auto"
        dims: ["vpm.field_roughness", "vpm.cluster_cohesion", "vpm.drift01"]
    selection:
      method: "mi+calibration_gain"
      budget: 2048  # max active dims per VPM row
```

Bottom line: the contract isn’t the metric list but the transport and the versioning. VPM stays the lingua franca; the metric swarm can grow, specialize, and self-edit without breaking time-travel comparability.
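The config’s `selection` block (a `budget` of active dims chosen by `method: "mi+calibration_gain"`) reads naturally as a ranking problem. A minimal sketch, assuming each candidate feature already carries a precomputed utility score (how mutual information and calibration gain are combined into that score is not specified here) and assuming the seed vitals are always kept so that old and new runs stay comparable; `select_features` is a hypothetical name.

```python
from typing import Dict, List


def select_features(utility: Dict[str, float], seeds: List[str], budget: int) -> List[str]:
    """Keep every seed vital, then fill the remaining budget with the highest-utility features."""
    if budget < len(seeds):
        raise ValueError("budget must at least cover the seed vitals")
    # Sort by descending utility; ties broken by name so the selection is deterministic.
    extras = sorted((n for n in utility if n not in seeds), key=lambda n: (-utility[n], n))
    return list(seeds) + extras[: budget - len(seeds)]
```

Deterministic tie-breaking matters here: two runs with the same utilities should produce the same row layout, or the pixels stop meaning the same thing.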

🎁 Why keep the seeds at all? The cognitive heartbeat

The seed vitals aren’t just technical anchors; they’re the heartbeat of cognition.

Just as your heart beats steadily while your thoughts wander freely, these metrics provide:

- A steady rhythm for the Jitter’s cognitive process

- Anchor points for comparing thought quality across time

- A pulse to measure against when exploring new dimensions

They’re not the entire mind; they’re the vital signs that tell us the mind is alive and growing.

🧸 Minimal pseudocode

```python
scorable = SSPScorable(
    episode_id=episode_id,
    question=q,
    seed_answer=seed,             # for F1 + leakage checks
    predicted_answer=pred,
    evidence_docs=evidence_docs,  # for search_turns + RAG gate
    solver_steps=steps,
    depth=depth,
    difficulty=difficulty01,      # already in [0,1]
    reward=verifier01,            # judge score in [0,1]
    verified=bool(solver_wins),
    score=score01,                # optional
    best_score=best01,            # optional
    meta={
        "novelty": novelty01,
        "search_turns": k,
        "rag_verified": bool_rag,
        "noise_doc_count": n_noise,
        "noise_success": succ01,
    },
)

metrics = SSPScorer(cfg).score(scorable)  # -> {'version', 'names', 'values', 'vector'}
```
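The comment on the last line names the scorer’s output contract: `version`, `names`, `values`, `vector`. Here is a minimal sketch of that contract, not `SSPScorer` itself. It assumes the table’s row order is the fixed emission order, that missing metrics default to 0.0, and that `values` is a name → value mapping; `pack_metrics` and `SEED_NAMES` are hypothetical names.

```python
from typing import Dict, List

SSP_METRIC_VERSION = "ssp.v1"
# Assumption: the fixed emission order follows the seed-vitals table.
SEED_NAMES: List[str] = [
    "ssp.reward", "ssp.verified", "ssp.curriculum_difficulty", "ssp.question_len",
    "ssp.answer_len", "ssp.evidence_count", "ssp.solver_steps", "ssp.score",
    "ssp.best_score", "ssp.improvement", "ssp.depth", "ssp.novelty",
    "ssp.search_turns", "ssp.f1_score", "ssp.format_compliance",
    "ssp.noise_tolerance", "ssp.rag_verification",
]


def pack_metrics(vmap: Dict[str, float]) -> Dict[str, object]:
    """Emit every seed metric in fixed order, clamped to [0, 1], missing ones as 0.0."""
    values = [max(0.0, min(1.0, float(vmap.get(n, 0.0)))) for n in SEED_NAMES]
    return {
        "version": SSP_METRIC_VERSION,
        "names": list(SEED_NAMES),
        "values": dict(zip(SEED_NAMES, values)),
        "vector": values,  # position i always means SEED_NAMES[i]
    }
```

Filling gaps with a constant instead of dropping them keeps the vector length fixed, which is what lets a frame from one run be laid pixel-for-pixel over a frame from another.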

🪀 VPMs: Images as Thoughts (making cognition trainable)

The Jitter isn’t a hidden essence; it’s a visible stream. We treat each SSP episode as a frame in that stream and standardize how it’s rendered.

How a thought becomes an image

- Metric vector → VPM frame. The 17 canonical seed metrics are mapped to a compact grayscale layout (bands in a fixed order). Same metrics → same pixels → stable meaning.

- Frames → filmstrip. Episodes over time form a timeline we can skim like an ECG of cognition.

- Filmstrip → embedding. A small vision model (VPM-ViT) learns to read frames and predict outcomes (risk class, success odds, good next move).

- Embedding → control. The controller uses those predictions to pick exemplars, adjust depth/steps, stop early, or escalate.
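The first two steps (metric vector → frame, frames → filmstrip) need nothing beyond the standard library. A minimal sketch, assuming one grayscale row per episode with each metric drawn as a fixed-width band of 0 to 255 pixels, written out as a binary PGM (P5) image; the band width and the PGM output are illustrative choices, not the project’s actual renderer, and both function names are hypothetical.

```python
from typing import List, Sequence


def render_frame(vector: Sequence[float], band: int = 4) -> List[int]:
    """One episode as a single grayscale row: metric i fills pixels [i*band, (i+1)*band)."""
    row: List[int] = []
    for v in vector:
        level = round(max(0.0, min(1.0, v)) * 255)  # 0 = black, 255 = white
        row.extend([level] * band)
    return row


def filmstrip_pgm(vectors: Sequence[Sequence[float]], band: int = 4) -> bytes:
    """Stack one row per episode (earliest at the top) and encode as binary PGM."""
    rows = [render_frame(v, band) for v in vectors]
    if not rows or any(len(r) != len(rows[0]) for r in rows):
        raise ValueError("need at least one frame, all with the same number of metrics")
    header = f"P5\n{len(rows[0])} {len(rows)}\n255\n".encode("ascii")
    return header + bytes(p for r in rows for p in r)
```

Writing the returned bytes to a `.pgm` file gives an image most viewers open directly; higher metric values render brighter, matching the dark → light trend in the filmstrip described earlier.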

```mermaid
flowchart TD
    subgraph VPM_Processing_Pipeline ["🔄 VPM Processing Pipeline<br/> From Metrics to Action"]
        E["📊 Episode<br/>17 seed metrics<br/>cognitive dimensions"] -->|"🎯 metric vector<br/>[0,1] normalized"| F["🖼️ VPM Frame<br/>grayscale image<br/>fixed layout"]
        F -->|"🔄 single thought moment"| FS["🎞️ Filmstrip<br/>sequence over time<br/>cognitive timeline"]
        FS -->|"📺 visible thought stream"| EMB["🧠 Visual Embedding<br/>(VPM-ViT)<br/>pattern recognition"]
        EMB -->|"🔍 learned patterns<br/>risk prediction"| CTRL["🎛️ Control Policy<br/>goal/thresholds<br/>strategic adjustment"]
        CTRL -->|"⚡ decisions<br/>adaptive tuning"| NEXT["⚙️ Next Episode Config<br/>improved parameters"]
    end

    classDef metrics fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef frame fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef filmstrip fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef embedding fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef control fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef config fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;
    classDef arrow fill:#ffffff,stroke:#666666,stroke-width:1px;

    class E metrics;
    class F frame;
    class FS filmstrip;
    class EMB embedding;
    class CTRL control;
    class NEXT config;
```

This is the Jitter’s learning loop: cognitive metrics become visual frames, frames form memory filmstrips, and our VPM-ViT model reads these patterns to guide smarter thinking in future episodes, closing the circle from thought to self-improvement.

Why images (not just numbers)?

- Stability: pixels freeze semantics across models and years.

- Interpretability: patterns of success/failure are obvious at a glance.

- Trainability: vision backbones are excellent at learning from small, structured images.

- Composability: frames can be linked temporally (what happened next?) and by similarity (what does this feel like?), forming a thought-graph that becomes the system’s style/personality.

What this buys us

- A memory of moments that is cheap to store, search, and replay.

- A visual dialect for the Jitter (images → images → images) that the system can both read and act on.

- A closed loop: see → decide → act → see, where the seeing is literally pixels.

🎞️ An example film strip result

This image is an example filmstrip generated by our process. As time goes on, the signal grows stronger and the frames become whiter.

The Jitter we’re building is not a soul or a secret essence; it’s a stream, a living accumulation of moments that passes through perception, recall, insight, and correction. Our claim is simple:

If we can represent each moment as an image, and make those images comparable, connectable, and trainable, then we can grow a visible, continuous thinking process.

Here’s how we do it step by step.

```mermaid
flowchart TD
    subgraph Thought_Generation ["🔁 The Thought Lifecycle"]
        Q["❓ Question<br/>What reality asks"] --> S1["🔍 Search<br/>Gather evidence"]
        S1 --> S2["💡 Solve<br/>Reason & construct answer"]
        S2 --> P["✅ Prove<br/>Verify & score"]
        P --> M["📊 Metrics → Metric Vector<br/>17 cognitive dimensions"]
        M --> V1["🖼️ RAW VPM<br/>Direct metric mapping"]
        M --> V2["🎨 PHOS VPM<br/>Sorted pattern view"]
        V1 --> C["🎛️ Controller<br/>Learning & steering"]
        V2 --> C
        C --> Q2["🔄 Next Episode<br/>Improved question"]
    end

    classDef question fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef process fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef metrics fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef vpm fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef controller fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef next fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;

    class Q,Q2 question;
    class S1,S2,P process;
    class M metrics;
    class V1,V2 vpm;
    class C controller;
```

The complete thought lifecycle: each episode moves from question through search, solution, and verification, then transforms cognitive metrics into dual visual representations (RAW and PHOS VPMs) that inform the controller’s decisions for the next, improved thought cycle.

🤔 1) Define a thought (as data you can revisit)

Every SSP episode is one “moment.” It contains:

- the question (what reality just asked us),

- the answer (what we tried),

- the evidence (what we looked at),

- the trace (how we got there),

- and a deterministic metric vector: our vital signs in [0,1] (verifier score, verified flag, difficulty, depth, steps, etc.).

This metric vector is the contract. Whether we score it with an LLM today or a custom model two years from now, it means the same thing and lives in the same positions. That makes the moment persistent.
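As a data shape, a moment can be a small immutable record. A minimal sketch: the `ThoughtMoment` name and its fields are hypothetical, and the version check is one assumed way to enforce that two vectors are only compared when their positions mean the same thing.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ThoughtMoment:
    question: str               # what reality just asked us
    answer: str                 # what we tried
    evidence: Tuple[str, ...]   # what we looked at
    trace: Tuple[str, ...]      # how we got there
    version: str                # e.g. "ssp.v1": fixes names and positions
    vector: Tuple[float, ...]   # vital signs, each in [0, 1]

    def __post_init__(self) -> None:
        if any(not 0.0 <= v <= 1.0 for v in self.vector):
            raise ValueError("every metric must lie in [0, 1]")

    def comparable_with(self, other: "ThoughtMoment") -> bool:
        # Same version => same names in the same positions => safe to compare.
        return self.version == other.version and len(self.vector) == len(other.vector)
```

Freezing the record means a stored moment cannot drift after the fact, which is what “persistent” requires.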

🧑🎨 2) Render the thought (as a compact image)

We convert that metric vector into a VPM frame: a small grayscale image where each band corresponds to a metric in a fixed order. It’s like an ECG for cognition: fast to write, fast to read, and always the same layout.

- Same order → same pixels → same meaning.

- One frame per episode; a sequence of frames becomes a filmstrip, the visible heartbeat of a run.
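“Same order → same pixels → same meaning” can be checked directly: if pixel i always carries metric i, a frame can be read back without any model. A minimal sketch, assuming one 8-bit pixel per metric (real frames may use wider bands); `encode` and `decode` are hypothetical names. The round trip loses at most half a gray level, i.e. 0.5/255 ≈ 0.002 per metric.

```python
from typing import Dict, List, Sequence


def encode(vector: Sequence[float]) -> List[int]:
    """Quantize each metric in [0, 1] to one 8-bit pixel, in fixed order."""
    return [round(max(0.0, min(1.0, v)) * 255) for v in vector]


def decode(pixels: Sequence[int], names: Sequence[str]) -> Dict[str, float]:
    """Read a frame back: pixel i always means metric names[i]."""
    if len(pixels) != len(names):
        raise ValueError("frame width does not match the metric layout")
    return {n: p / 255 for n, p in zip(names, pixels)}
```

The length check is the layout contract in miniature: a frame rendered under a different metric list is rejected instead of silently misread.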

⚖️ 3) Compare thoughts (find neighbors and patterns)

With images, similarity is natural. We can:

- compute simple distances (cosine / L2) on flattened frames,

- or learn visual embeddings (e.g., our VPM-ViT) so similar cognitive states sit close together in latent space.

Now we can answer questions like:

- When do we succeed in the same way?

- What does failure “look” like?

- Which adjustments lead to recovery?
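Both distances mentioned above take a few lines on flattened frames, and a per-band diff answers “what changed?” directly. A sketch with hypothetical helper names. One design difference is worth knowing: cosine ignores overall brightness, so a uniformly dimmer frame looks identical under cosine but not under L2.

```python
import math
from typing import List, Sequence, Tuple


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two flattened frames."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle-based similarity; insensitive to uniform scaling of a frame."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 0.0 if norm == 0.0 else dot / norm


def biggest_changes(before: Sequence[float], after: Sequence[float],
                    names: Sequence[str], k: int = 2) -> List[Tuple[str, float]]:
    """Which metric bands moved the most between two frames (signed change)."""
    diffs = [(n, y - x) for n, x, y in zip(names, before, after)]
    diffs.sort(key=lambda d: abs(d[1]), reverse=True)
    return diffs[:k]
```

For “what does failure look like?”, L2 is usually the safer default, since a drop in reward and verification shows up mostly as lost brightness.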

🔗 4) Connect thoughts (make a path, not a pile)

A stream is not a bucket. We connect frames into traces:

- Temporal links (episode → episode) show continuity.

- Similarity links (nearest neighbors) show related states across runs.

- Causal hints (verification flips, local gap closures) mark why we moved.

These links form a thought-graph: clusters of stable strategies, bridges of recovery, attractors we keep returning to. Over time, that graph is the personality of the system.
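A minimal sketch of those three link types, assuming frames are plain metric vectors in run order and that a change in the verified flag between consecutive episodes is the “causal hint.” The function name and edge labels are hypothetical, and brute-force nearest neighbors stand in for a real index.

```python
from typing import Dict, Sequence, Set, Tuple

Edge = Tuple[str, int]  # (link type, target episode index)


def build_thought_graph(frames: Sequence[Sequence[float]],
                        verified: Sequence[bool], k: int = 1) -> Dict[int, Set[Edge]]:
    if len(frames) != len(verified):
        raise ValueError("one verified flag per frame")
    graph: Dict[int, Set[Edge]] = {i: set() for i in range(len(frames))}
    for i in range(len(frames) - 1):
        graph[i].add(("next", i + 1))          # temporal continuity
        if verified[i] != verified[i + 1]:
            graph[i].add(("flip", i + 1))      # verification flipped: a reason we moved
    for i, f in enumerate(frames):
        dists = sorted(
            (sum((x - y) ** 2 for x, y in zip(f, g)), j)
            for j, g in enumerate(frames) if j != i
        )
        for _, j in dists[:k]:
            graph[i].add(("similar", j))       # nearest neighbours in metric space
    return graph
```

Clusters of mutual “similar” edges are candidate stable strategies; “flip” edges mark where the stream changed course.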

🏋️♂️ 5) Train on the stream (so the stream gets better)

Because thoughts are images, we can train directly on the filmstrip:

- A small vision model (our VPM-ViT) learns to read frames and predict outcomes (risk class, success odds, suggested next move).

- The controller uses these predictions to nudge the next thought (choose an exemplar, adjust depth, stop early, escalate).

- The new outcome creates the next frame, closing the loop.

That’s the organism: see → decide → act → see again, with pictures as the lingua franca.
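The nudge step can be sketched as a small policy over a predicted success score. In this sketch the VPM-ViT is replaced by a deliberately crude stand-in (mean frame brightness), and the thresholds, `EpisodeConfig` fields, and action names are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Sequence, Tuple


@dataclass(frozen=True)
class EpisodeConfig:
    depth: int
    max_steps: int


def predict_success(frame: Sequence[float]) -> float:
    """Stand-in for VPM-ViT: brighter frames (higher metrics) read as likelier success."""
    return sum(frame) / len(frame) if frame else 0.0


def next_move(frame: Sequence[float], cfg: EpisodeConfig, stop_at: float = 0.85,
              weak_below: float = 0.3, max_depth: int = 8) -> Tuple[str, EpisodeConfig]:
    p = predict_success(frame)
    if p >= stop_at:
        return "stop", cfg                                  # confident: stop early
    if p < weak_below and cfg.depth < max_depth:
        return "deepen", replace(cfg, depth=cfg.depth + 1)  # weak: search deeper
    if p < weak_below:
        return "escalate", cfg                              # weak at max depth: hand off
    return "continue", cfg
```

Swapping `predict_success` for a learned model changes nothing else in the loop, which is the point of keeping the frame as the interface.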

🏢 What this builds toward

- A memory of moments you can replay, compare, and learn from.

- A visual dialect for the Jitter (images → images → images 🔄) that lets it recognize itself across time.

- A playground where self-play generates experience, metrics turn it into images, and images teach the next move.

This is the first step. Next, we’ll show the SSP loop that emits these frames, the exact metric vector we use, and how the VPM controller learns to steer so the stream doesn’t just flow, it improves.

🧩 How this fits the bigger picture

We’ve already built pieces Stephanie needs:

- Multi-dimensional scoring and knowledge measurement

- An image-first worldview: VPMs and timelines (generally, when you think visual, think Zeromodel).

- The infrastructure to remember, compare, and improve

This post plants the first stake: SSP as the cognitive heartbeat. Next, we’ll show how Jitter stabilizes (homeostasis), how VPM-ViT learns directly from those images, and how the system’s identity emerges as the history of its own thinking: visible, measurable, and getting better.

⚽ The Self-Play Loop: A Digital Organism’s Metabolism

```mermaid
flowchart TD
    subgraph SSP_Metabolism ["🔄 Self-Play Metabolism: The Jitter's Cognitive Engine"]
        P["🧠 Proposer<br/>Generate challenges"] -->|"📝 question +<br/>📚 evidence"| S["🔍 Solver<br/>Search & reason"]
        S -->|"💡 answer +<br/>🔄 steps"| V["✅ Verifier<br/>RAG verification"]
        V -->|"🎯 score &<br/>⚖️ decision"| M["📊 Metrics Calculator<br/>17 cognitive dimensions"]
        M -->|"🎨 vector"| W["🖼️ VPM Generator<br/>Raw + PHOS views"]
        W -->|"🎬 raw + PHOS"| F["📺 Filmstrip<br/>Visible thought stream"]
        F --> G["🎞️ GIF/Video<br/>Cognitive timeline"]
        V -->|"📝 feedback"| P
    end

    classDef proposer fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef solver fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef verifier fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef metrics fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef vpm fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef filmstrip fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;
    classDef output fill:#fff0f6,stroke:#eb2f96,stroke-width:2px;

    class P proposer;
    class S solver;
    class V verifier;
    class M metrics;
    class W vpm;
    class F filmstrip;
    class G output;
```

*What you’re seeing: this is the Jitter’s cognitive metabolism, a continuous cycle where the system generates its own challenges, solves them, verifies the solutions, and learns from the process. The Proposer creates questions, the Solver searches for answers, the Verifier checks their quality, and the Metrics system converts this into visual thought patterns (VPMs) that form a visible filmstrip of cognition. The feedback loop ensures each cycle builds on the last, creating a self-improving stream of thought that becomes progressively more capable.*

🎶 The SSP Algorithm: Orchestrating the Digital Thought Stream

The heart of the Jitter system is the SSP algorithm: the conductor that coordinates the Search-Solve-Prove process to create a visible, measurable thought stream. Let’s examine how this orchestrator works and why it suits our digital organism.

✨ How the SSP Loop Creates a “Thought”

At its core, an SSP episode is one moment of cognition - a complete cycle of encountering a problem, processing it, and verifying the solution. This mirrors how our own thoughts form:

- Search (Proposer): Like our mind generating a question from a seed idea

- Solve (Solver): Like our mind gathering evidence and reasoning

- Prove (Verifier): Like our mind checking if the answer makes sense

Here’s the elegant simplicity of the loop:

```python
import uuid
from typing import Any, Dict


async def run_episode(self, seed_answer: str, context: Dict[str, Any]) -> EpisodeTrace:
    # Assumption: the id is generated here; the earlier snippet used `episode_id` without defining it.
    episode_id = f"ssp-{uuid.uuid4().hex[:12]}"

    # 1. Proposer: create a question from a seed answer
    q, prop_evidence, prop_meta = await self.proposer.propose(seed_answer, context)

    # 2. Solver: answer using search (like our mind gathering evidence)
    pred, evidence_docs, solver_steps, solver_meta = await self.solver.solve(
        question=q, seed_answer=seed_answer, context=context
    )

    # 3. Verifier: check if the answer is correct (like our mental verification)
    solver_wins, judge_score, judge_details = await self.verifier.verify(
        q, seed_answer, pred, evidence_docs, context
    )

    # 4. Create a permanent record of this "thought"
    ep = EpisodeTrace(
        episode_id=episode_id,
        seed_answer=seed_answer,
        question=q,
        predicted_answer=pred,
        evidence_docs=evidence_docs,
        solver_steps=solver_steps,
        verified=bool(solver_wins),
        reward=float(judge_score),
        # ...other metadata
    )

    # 5. Convert the thought into visual form (VPM) from the canonical metric vector
    if self.vpm_visualization:
        scorable = SSPScorable.from_episode_trace(ep)
        dims = self._ssp_scorer.score(scorable)["vector"]
        self.vpm_visualization.snapshot_progress(unit=episode_id, dims=dims, step_idx=0)

    return ep
```

This creates what we call a thought moment - a self-contained cognitive event that can be stored, compared, and learned from.

➡️ Why This Implementation Aligns with the SSP Paper

Our implementation follows the paper’s core innovation: self-play without supervision. As the paper states:

“Through RL training with rule-based outcome rewards, SSP enables two roles to co-evolve in an adversarial competition: the proposer learns to generate increasingly challenging problems that require search and reasoning, while the solver develops stronger search and reasoning capabilities to tackle these problems.”

Here’s how our code embodies this:

1. The Critical RAG Verification Process

The paper emphasizes: “To verify the correctness of each generated query, we collect all the searching results from the proposer’s trajectory as the external materials, then conduct a retrieval augmentation generation (RAG) to check if the solver can successfully predict the answer with all necessary information.”

In our code:

```python
# RAG verification: did the solver beat the seed using ONLY the evidence?
solver_wins, judge_score, judge_details = await self.verifier.verify(
    q, seed_answer, pred, evidence_docs, context
)
```

This is the quality gate that prevents degeneration - without it, the system would quickly learn to generate unanswerable questions or rely on internal knowledge rather than search.

2. Tracking Meaningful Capability Growth

The paper shows in Figure 4: “the average number of search tool calls per trajectory steadily increases over time… Simultaneously, Figure 4b shows that the solver’s response length also grows during the training, suggesting it learns to generate more detailed and comprehensive answers.”

Our metrics system captures exactly these signals:

```python
# Track search capability growth: running mean of solver steps over verified
# episodes (assumes verified_count already counts this episode)
self.metrics.avg_solver_steps = (
    self.metrics.avg_solver_steps * (verified_count - 1) + ep.solver_steps
) / verified_count

# Track reasoning depth (via evidence usage)
evid_cnt = len(scorable.evidence_docs or [])
vmap["ssp.evidence_count"] = _clamp01(evid_cnt / max(1, self.max_evidence))
```

These metrics aren’t just numbers - they’re visible indicators of cognitive growth that we convert to VPM images.
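The `avg_solver_steps` update above is the standard incremental mean, `avg_n = (avg_{n-1} * (n - 1) + x_n) / n`, so it can be checked against a plain batch average. A quick sketch with hypothetical function names; the Welford-style form `avg += (x - avg) / n` computes the same value without multiplying back by `n - 1`, and is shown only as a common alternative, not as what the code above uses.

```python
from typing import Iterable


def running_mean(xs: Iterable[float]) -> float:
    """Same update as avg_solver_steps: multiply back, add the new value, divide by n."""
    avg, n = 0.0, 0
    for x in xs:
        n += 1
        avg = (avg * (n - 1) + x) / n
    return avg


def running_mean_welford(xs: Iterable[float]) -> float:
    """Equivalent incremental form: nudge the mean toward each new value by 1/n."""
    avg, n = 0.0, 0
    for x in xs:
        n += 1
        avg += (x - avg) / n
    return avg
```

Both forms need only the previous mean and the count, which is why the metric can be updated per episode without keeping the full history.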

3. The Self-Play Reward Dynamics

The paper warns: “This experiment critically underscores that the proposer’s reward design is paramount for stable co-evolution in SSP; a punitive approach can destabilize the entire self-play dynamic.”

Our implementation handles this carefully:

# Only compute rewards for verified episodes (paper's game signal)
if ep.verified:
    self._calculate_and_apply_rewards([ep], unverified_count=0)

def _calculate_and_apply_rewards(self, verified_episodes, unverified_count):
    rewards = calculate_self_play_rewards(verified_episodes, unverified_count)
    # Apply to episodes...

We’ve implemented the paper’s insight that only valid (verified) episodes should contribute to training; otherwise, the system degenerates.
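`calculate_self_play_rewards` itself isn't shown. One plausible shape, assumed here rather than taken from the paper's exact formulas: the solver earns 1.0 for a correct answer, and the proposer earns 1 minus the solver's success rate over verified episodes, so questions the solver always answers pay nothing. Unverified episodes are left out rather than penalized. `Ep` and `sketch_self_play_rewards` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Ep:
    episode_id: str
    verified: bool
    solver_correct: bool  # did the searching solver reproduce the seed answer?

def sketch_self_play_rewards(episodes: list[Ep], unverified_count: int = 0) -> dict:
    """Hypothetical reward split computed over verified episodes only.

    Solver: 1.0 for a correct answer, else 0.0.
    Proposer: 1 - solver success rate (harder valid questions pay more).
    Unverified episodes are skipped, not penalized.
    """
    verified = [e for e in episodes if e.verified]
    skipped = unverified_count + (len(episodes) - len(verified))
    if not verified:
        return {"proposer": 0.0, "solver": {}, "skipped": skipped}
    solver = {e.episode_id: 1.0 if e.solver_correct else 0.0 for e in verified}
    success_rate = sum(solver.values()) / len(solver)
    return {"proposer": 1.0 - success_rate, "solver": solver, "skipped": skipped}
```

Skipping instead of punishing keeps the proposer's reward non-negative, which is in the spirit of the paper's warning about punitive proposer rewards.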

🚰 The Thought Visualization Pipeline

Here’s where we extend beyond the paper to create the Jitter’s visible thought stream:

# After creating the EpisodeTrace
scorable = SSPScorable.from_episode_trace(ep)
ssp_metrics = self._ssp_scorer.score(scorable)  # Get canonical metrics

# Convert thought to visual form
if self.vpm_visualization:
    # Create initial snapshot
    self.vpm_visualization.snapshot_progress(
        unit=episode_id,
        dims=ssp_metrics["vector"],
        step_idx=0,
        tag="proposed",
    )

    # Generate final visualizations
    raw_path = self.vpm_visualization.generate_raw_vpm_image(unit=episode_id)
    phos_path = self.vpm_visualization.generate_phos_image(unit=episode_id)
    film_path = self.vpm_visualization.generate_filmstrip(unit=episode_id)

This is the magic: converting cognitive metrics into visual frames that form our filmstrip of thought. Each metric becomes a pixel band in the VPM frame:

- ssp.verified → Success channel
- ssp.search_turns → Search capability channel
- ssp.f1_score → Accuracy channel
- ssp.noise_tolerance → Robustness channel
- ssp.rag_verification → Quality gate channel
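The renderer isn't shown here, so this is a dependency-free sketch of the idea, assuming a fixed channel order and a hypothetical band width: each [0,1] metric becomes a run of grayscale pixels in one row, and rows stack into a filmstrip. PGM output is used only because it needs no imaging library; `to_row`, `filmstrip`, and `to_pgm` are illustrative names.

```python
# Channel order is the layout: changing it would move every pixel band.
CHANNELS = ("ssp.verified", "ssp.search_turns", "ssp.f1_score",
            "ssp.noise_tolerance", "ssp.rag_verification")

def to_row(metrics: dict[str, float], channels=CHANNELS, band: int = 4) -> list[int]:
    """One metric vector -> one row of grayscale pixels (0=dark, 255=bright).
    Each channel fills `band` pixels; missing channels render as 0 so the
    layout never shifts between frames."""
    row: list[int] = []
    for name in channels:
        v = min(1.0, max(0.0, float(metrics.get(name, 0.0))))
        row.extend([round(v * 255)] * band)
    return row

def filmstrip(frames: list[dict[str, float]], **kw) -> list[list[int]]:
    """Stack one row per step, so time runs top to bottom."""
    return [to_row(m, **kw) for m in frames]

def to_pgm(strip: list[list[int]]) -> bytes:
    """Encode the strip as a binary PGM (P5) image."""
    height, width = len(strip), len(strip[0])
    header = f"P5 {width} {height} 255\n".encode("ascii")
    return header + bytes(p for row in strip for p in row)
```

Writing `to_pgm(filmstrip(frames))` to a `.pgm` file produces an image most viewers can open; the "dark to light" trend described at the top of this post corresponds to rows getting brighter from top to bottom.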

💚 A “Visual” Thought Stream

The true innovation isn’t just the SSP loop itself, but how we connect these episodes into a continuous stream:

- Temporal connection: Each episode leads to the next

- Similarity connection: VPM frames allow us to find similar cognitive states

- Causal connection: Verification results guide future proposals

As the paper notes: “In stark contrast to the flawed dynamics of fixed-opponent training, our complete SSP framework facilitates a stable co-evolution.” Our implementation takes this further by making the co-evolution visible and measurable through VPM.

This is how we create the Jitter: not as a mysterious “self,” but as a visible, persistent stream of connected thought moments, each one a complete Search-Solve-Prove cycle that can be stored, compared, and improved upon.

🤸 Next Steps in Our Journey

In upcoming sections, we’ll dive into each component:

- The Proposer: How we generate questions that create meaningful challenges

- The Solver: Our enhanced search capabilities (including the GPO tree search)

- The Verifier: Our multi-signal verification process that goes beyond the paper

- The VPM System: How we turn metrics into visual thought streams

Each of these components plays a vital role in creating the Jitter: the visible, measurable thought process at the heart of our digital organism. The SSP algorithm is simply the conductor that brings them all together in harmony.


🕵️‍♂️ Module 1 Searching Proposer

Goal: turn a mechanism/seed answer into a single, precise, verifiable question, backed by a small pile of evidence snippets, so the rest of SSP has something rigorous to solve and prove.

🎬 What this proposer does

- Search first, ask later. It generates a few lightweight query rewrites from the seed (e.g., “What is X?”, “How does X work?”), calls the SolutionSearch service to fetch top-K snippets, and de-duplicates them.

- Constrain the LLM to a 4-line contract. It then prompts the LLM with the seed + evidence and forces a 4-line output:

  rationale: ...
  difficulty: <0–100>
  verifiability: <0–100>
  question: <one precise, verifiable question>

  We parse this strictly, so downstream components receive clean difficulty/verifiability ints and a single normalized question.

- Apply safety rails and fallbacks.

  - Min length: if the question is too short/empty, fall back to “What is <seed_answer>?” (never breaks downstream).
  - Answer-leak guard: if the exact seed appears in the question text, swap it with “this mechanism”.
  - Retries with backoff on transient prompt failures.

- Emit a tiny VPM frame for visibility. The proposer logs a frame via VPMControlService.decide() with dims like evidence_quality = clip(len(evidence)/max_snippets) and question_length = clip(len(question)/100). These become part of the filmstrip so you can see proposal quality over time.

👨‍💻 Key code paths

1) Evidence-aware question crafting

rewrites = [
    f"What is {seed_answer}?",
    f"Explain {seed_answer} in detail",
    f"How does {seed_answer} work?",
    # + optional user patterns via config
]

for rewrite in rewrites:
    snippets = await self.solution_search.find_snippets(rewrite, top_k=...)
    # ...

prompt = self.prompt_loader.from_text(PROPOSER_PROMPT_TMPL, {
    "seed_answer": seed_answer,
    "evidence": "<br/>".join(all_evidence),
})
response = await self.prompt_service.run_prompt(prompt_text=prompt, context=merged_context)
parsed = parse_proposer_lines(response)
question = self._normalize_question(parsed.get("question", ""))

Why it matters: questions are grounded in retrieved context, not free-floating completions. This reduces trivia, improves verifiability, and keeps the loop honest.

2) Hard-contract prompt (4 lines, deterministic)

PROPOSER_PROMPT_TMPL = """You are building an SSP dataset...

OUTPUT FORMAT WRITE EXACTLY FOUR LINES, IN THIS ORDER, NO CODE FENCES:

rationale: <...>

difficulty: <0-100>

verifiability: <0-100>

question: <...>

"""

Why it matters: strict structure → stable parsing → deterministic telemetry & metrics.
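`parse_proposer_lines` isn't shown. A sketch of a strict parser for the four-line contract above, assuming keys are case-insensitive, scores are clamped to 0..100 (0 when unparseable), and the first occurrence of a key wins; our actual parser may make different choices.

```python
import re

_FIELD = re.compile(
    r"^\s*(rationale|difficulty|verifiability|question)\s*:\s*(.*?)\s*$",
    re.IGNORECASE,
)

def parse_proposer_lines(response: str) -> dict:
    """Parse the 4-line proposer contract into a dict (sketch)."""
    out: dict = {}
    for line in response.splitlines():
        m = _FIELD.match(line)
        if not m:
            continue  # ignore chatter outside the contract
        key, value = m.group(1).lower(), m.group(2)
        if key in out:
            continue  # first answer wins; later duplicates are dropped
        if key in ("difficulty", "verifiability"):
            digits = re.search(r"\d+", value)
            value = max(0, min(100, int(digits.group()))) if digits else 0
        out[key] = value
    return out
```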

3) Question normalization + leak guard

# normalize "???" → "?"
text = re.sub(r"\?+", "?", text).strip()
if text and not text.endswith("?"):
    text += "?"

# replace explicit seed with "this mechanism"
pattern = re.compile(re.escape(seed_answer), re.IGNORECASE)
q2 = pattern.sub("this mechanism", q)

Why it matters: keeps the task non-degenerate (no “just repeat the answer”).
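The fragments above can be combined into one runnable helper. This sketch also applies the min-length fallback from the safety rails, with defaults matching the config knobs listed later in this module; `normalize_question` is a hypothetical name, and running the leak guard before the fallback is our assumption about ordering.

```python
import re

def normalize_question(text: str, seed_answer: str, min_len: int = 12,
                       forbid_answer_leak: bool = True) -> str:
    """Collapse repeated '?', enforce a trailing '?', hide the seed answer,
    and fall back to a safe default when the result is too short."""
    q = re.sub(r"\?+", "?", text or "").strip()
    if q and not q.endswith("?"):
        q += "?"
    if forbid_answer_leak and seed_answer:
        q = re.sub(re.escape(seed_answer), "this mechanism", q, flags=re.IGNORECASE)
    if len(q) < min_len:
        # Fallback restates the seed, as described in the safety rails.
        q = f"What is {seed_answer}?"
    return q
```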

4) VPM tap (proposer heartbeat)

self.vpm_control.decide(
    unit=f"proposer:{(hash(seed_answer) & 0xffff):04x}",
    kind="text",
    dims={
        "evidence_quality": min(1.0, len(all_evidence) / max(1, self.max_snippets)),
        "question_length": min(1.0, len(question) / 100.0),
    },
    step_idx=ctx.get("step_idx", 0),
    meta={"seed_answer": seed_answer, "evidence_count": len(all_evidence), "latency_s": dt},
)

Why it matters: every proposal becomes a visible frame in the SSP filmstrip. You can spot bad proposals (short, low evidence) at a glance.
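One caveat with `hash(seed_answer) & 0xffff`: Python salts `str` hashes per interpreter process (unless `PYTHONHASHSEED` is fixed), so the same seed gets a different unit id on every run and frames from separate runs won't line up. A sketch of a stable alternative built on `hashlib.blake2b`; `stable_unit_id` is a hypothetical helper.

```python
import hashlib

def stable_unit_id(seed_answer: str, prefix: str = "proposer") -> str:
    """Deterministic 16-bit unit id: the same seed maps to the same id
    in every process, unlike the salted built-in hash()."""
    digest = hashlib.blake2b(seed_answer.encode("utf-8"), digest_size=2).digest()
    return f"{prefix}:{int.from_bytes(digest, 'big'):04x}"
```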

🌴 Tree search tie-in

Although the tree primarily lives in the solver, the proposer helps shape the search frontier by:

- producing multiple rewrites (diverse initial branches),

- delivering evidence snippets the solver can attach to nodes,

- and emitting difficulty/verifiability signals that can seed a per-question curriculum (deeper trees for easy items, wider for uncertain ones).

⚙️ Config knobs (sane defaults)

- proposer.rewrites: number of query rewrites (default 3)
- proposer.max_snippets: evidence cap (default 6)
- proposer.min_question_len: drop too-short candidates (default 12 chars)
- proposer.forbid_answer_leak: anonymize seed in question (default True)
- proposer.retries + proposer.backoff_sec: prompt robustness

Extensibility: you can add proposer.additional_rewrites = ["Mechanism of {seed_answer}", ...] in config, with no code change.

💍 Interface contract (so we can swap proposers)

All proposers should implement:

async def propose(self, seed_answer: str, context: EpisodeContext | None) \
        -> tuple[str, list[str], dict]:
    """Return (question, evidence_docs, meta)"""

def get_capabilities(self) -> dict:
    return {
        "supports_search_during_proposal": True,
        "max_evidence_docs": self.max_snippets,
        "min_question_length": self.min_question_len,
    }

That means later we can plug in:

- Template Proposer (no LLM, pure rules)

- Paper-aware Proposer (specialized for technical mechanisms)

- Adversarial Proposer (intentionally tricky variations)

…and keep the rest of SSP unchanged.
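A sketch of that contract as a `typing.Protocol`, plus a rule-only Template Proposer like the one listed above. `context` is typed as a plain dict as a stand-in for `EpisodeContext`, and the template text and meta values are illustrative assumptions.

```python
import asyncio
from typing import Any, Optional, Protocol

class Proposer(Protocol):
    async def propose(self, seed_answer: str, context: Optional[dict[str, Any]] = None
                      ) -> tuple[str, list[str], dict[str, Any]]: ...
    def get_capabilities(self) -> dict[str, Any]: ...

class TemplateProposer:
    """Rule-only proposer: no LLM, no search. Useful as a baseline."""

    def __init__(self, template: str = "Which mechanism is described by: {hint}?",
                 min_question_len: int = 12):
        self.template = template
        self.min_question_len = min_question_len

    async def propose(self, seed_answer, context=None):
        # The hint describes the mechanism without naming the seed (no leak).
        hint = (context or {}).get("hint", "the process discussed in the evidence")
        question = self.template.format(hint=hint)
        meta = {"proposer": "template", "difficulty": 10, "verifiability": 90}
        return question, [], meta

    def get_capabilities(self):
        return {"supports_search_during_proposal": False,
                "max_evidence_docs": 0,
                "min_question_length": self.min_question_len}

if __name__ == "__main__":
    proposer: Proposer = TemplateProposer()
    print(asyncio.run(proposer.propose("dropout", {"hint": "randomly zeroing activations"})))
```

Because the class satisfies the protocol structurally, it can be swapped in wherever the LLM proposer is used, with no inheritance required.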

🎉 Why this design works

- Grounded (retrieved evidence guides the question).

- Deterministic enough (strict output schema + normalization).

- Robust (retries, fallbacks, leak guard).

- Visible (VPM logs make quality legible).

- Composable (clean interface → easy to swap/extend).

Next module: the Solver, covering how the tree search expands candidates, uses the evidence, and produces a trace we can score and visualize. But first, we need to describe a component that makes this work.

🌳 Agentic Tree Search: The Cognitive Engine of the Jitter

“The unexamined thought is not worth thinking.”

Adapted from Socrates

While our VPM system gives the Jitter eyes to see its thoughts, the Agentic Tree Search (ATS) provides the cognitive engine that generates those thoughts. This is where the Jitter transforms from a passive observer into an active thinker: one that engages with the world, gathers evidence, and constructs understanding.

🌀 The Thought Generation Problem

The SSP paper poses a fundamental challenge: How can an agent learn to solve complex problems without supervision? It answers this with a self-play framework where:

“The proposer learns to generate increasingly challenging problems that require search and reasoning, while the solver develops stronger search and reasoning capabilities to tackle these problems.”

But how does this actually work in practice? How does the solver translate a question into a chain of reasoning that leads to an answer? This is where Agentic Tree Search becomes the cognitive engine of our Jitter.

🚀 The Cognitive Architecture of Thought

At its core, ATS implements what cognitive scientists call guided exploration, the process by which humans solve unfamiliar problems:

- Problem decomposition: Breaking a question into manageable parts

- Hypothesis generation: Creating potential paths to an answer

- Evidence gathering: Seeking relevant information for each path

- Evaluation: Determining which paths show promise

- Synthesis: Combining the most promising evidence into a coherent answer

While the SSP paper frames the method as a proposer–solver self-play game, we instantiate each episode as a tree-search control problem (ATS) and layer SSP’s verified rewards on top.

flowchart TD

A["🌳 Root Question<br/>'What causes climate change?'"] --> B["🔄 Rewritten Query 1<br/>'Explain climate change mechanisms'"]

A --> C["🔄 Rewritten Query 2<br/>'Describe climate change in practical terms'"]

A --> D["🔄 Rewritten Query 3<br/>'Climate change causes for beginners'"]

B --> B1["📄 Evidence Snippet 1<br/>'Greenhouse gases trap heat...'"]

B --> B2["📄 Evidence Snippet 2<br/>'Industrial emissions contribute...'"]

B --> B3["📄 Evidence Snippet 3<br/>'Natural climate cycles...'"]

C --> C1["📄 Evidence Snippet 1<br/>'Climate change manifests as...'"]

C --> C2["📄 Evidence Snippet 2<br/>'Temperatures have risen...'"]

D --> D1["📄 Evidence Snippet 1<br/>'Climate change basics: CO2...'"]

classDef question fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;

classDef query fill:#f6ffed,stroke:#52c41a,stroke-width:2px;

classDef evidence fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;

class A question;

class B,C,D query;

class B1,B2,B3,C1,C2,D1 evidence;

This tree search visualization shows how the Jitter explores multiple reasoning paths simultaneously: rewriting the original question into different perspectives, then gathering relevant evidence for each approach. This branching exploration mirrors human problem-solving, where we consider various angles before converging on the most promising solution.

We don’t just magically produce answers; we explore multiple angles, gather evidence, and refine our understanding as we go.

🪟 Making Cognitive Growth Visible: What We Do and Why

SSP reports simple but telling signals of capability growth (e.g., more search calls per trajectory; longer, more detailed answers). We make those signals legible in our system by instrumenting our Agentic Tree Search (ATS) and turning each episode into a visual, comparable thought moment.

🤷 What we do

- Instrument the search. For every episode we log:

  - search_turns (actual search tool calls)
  - solver_steps (actions taken)
  - depth (max explored depth in ATS)
  - evidence_count (documents accepted into the rationale)
  - verified + verifier_score (RAG/judge gate)
  - Length features (question_len, answer_len)
  - Optional quality/robustness (format_compliance, noise_tolerance, rag_verification, novelty, etc.)

- Emit a deterministic metric vector. Metrics are normalized to [0,1], fixed in name and order, and versioned. That makes an episode today directly comparable to one months from now.

- Render the moment as an image. We convert the metric vector into a tiny VPM frame (and a PHOS variant). A sequence of frames forms a filmstrip: a visible record of how reasoning evolves across steps and runs.

- Close the loop with control. A lightweight policy reads frames (or VPM-ViT embeddings) to decide stop/expand/escalate: e.g., continue search, reuse a strong exemplar, or early-stop when verification is stable.
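To make “fixed in name and order, and versioned” concrete, here is a sketch of a schema object that turns raw episode stats into a [0,1] vector. The version string and the normalization caps are hypothetical placeholders, not our production values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MetricSchema:
    version: str
    names: tuple[str, ...]     # fixed order = fixed pixel positions
    caps: dict[str, float]     # divide raw counts by these before clamping

    def vectorize(self, raw: dict[str, float]) -> list[float]:
        """Map raw episode stats to a [0,1] vector in schema order.
        Counts are divided by their cap; everything is clamped; missing -> 0."""
        vec = []
        for name in self.names:
            x = float(raw.get(name, 0.0))
            cap = self.caps.get(name)
            if cap:
                x = x / cap
            vec.append(min(1.0, max(0.0, x)))
        return vec

SCHEMA_V1 = MetricSchema(
    version="ssp-metrics/v1",
    names=("verified", "verifier_score", "search_turns", "solver_steps",
           "depth", "evidence_count", "question_len", "answer_len"),
    caps={"search_turns": 10, "solver_steps": 20, "depth": 5,
          "evidence_count": 8, "question_len": 128, "answer_len": 512},
)
```

Storing `version` alongside each frame means a later schema (new channel, different cap) never silently reinterprets old images.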

🧐 Why we do it

- Legibility: You can see capability changes (e.g., rising search_turns with stable verified) rather than infer them from logs.

- Comparability: The fixed, versioned vector means runs are apples-to-apples across time, models, and settings.

- Control: Visual signals feed simple policies (and the VPM-ViT) to steer search depth, evidence acceptance, and stopping criteria.

- Diagnosis: Patterns reveal failure modes fast: over-searching (high search_turns, low verified), shallow reasoning (low depth, short answer_len), brittle RAG gates, etc.
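These diagnosis patterns can be written as plain rules over the normalized vector. The thresholds are illustrative guesses, and reading “brittle RAG gate” as “verified with no accepted evidence” is our assumption for this sketch.

```python
def diagnose(m: dict[str, float]) -> list[str]:
    """Flag failure patterns from a normalized [0,1] metric dict (sketch)."""
    flags = []
    if m.get("search_turns", 0) >= 0.7 and m.get("verified", 0) < 0.5:
        flags.append("over-searching")       # lots of search, nothing verified
    if m.get("depth", 0) <= 0.2 and m.get("answer_len", 0) <= 0.2:
        flags.append("shallow reasoning")    # short tree, short answer
    if m.get("verified", 0) >= 0.5 and m.get("evidence_count", 0) == 0:
        flags.append("brittle RAG gate")     # passed with no accepted evidence
    return flags
```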

👓 How to read our visuals

- Brighter bands in search_turns and answer_len, with a consistently bright verified band, indicate healthier, more deliberate reasoning.

- Depth stabilizing while evidence_count stays moderate often indicates better targeting (less flailing, more proof).

- PHOS layouts highlight recurring “good shapes” (stable regimes) and drift when curriculum difficulty rises.

flowchart LR

subgraph Episode ["🎬 Single Thought Episode"]

A["🌳 ATS Search<br/>nodes, depth, evidence"] --> B["✅ Verify<br/>RAG / judge scoring"]

B --> C["📊 Deterministic Metrics<br/>fixed names/order"]

C --> D["🖼️ VPM Frame<br/>+ PHOS visualization"]

end

D --> E["🎛️ Controller<br/>stop/expand/escalate"]

E -->|"⚡ policy choice"| A

classDef search fill:#f6ffed,stroke:#52c41a,stroke-width:2px;

classDef verify fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;

classDef metrics fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;

classDef vpm fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;

classDef controller fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;

class A search;

class B verify;

class C metrics;

class D vpm;

class E controller;

This closed-loop system shows how each cognitive episode becomes a measurable, visual thought. The Agentic Tree Search explores reasoning paths, verification scores the quality, metrics capture the cognitive signature, and the VPM frame makes it visible. The controller then uses this visual feedback to make real-time decisions (stopping unproductive searches, expanding promising ones, or escalating difficult problems), creating a self-adjusting thought process that learns from its own patterns.
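The controller's policy isn't specified in detail. A toy version over a sliding window of frames, assuming it reads only `verified` and `reward`: stop when verification is stable, expand while reward is rising, escalate when progress stalls, and stop once a step budget runs out. `FrameController` and all thresholds are hypothetical.

```python
from collections import deque

class FrameController:
    """Toy stop/expand/escalate policy over the last `window` frames."""

    def __init__(self, window: int = 3, stable_verified: float = 0.8,
                 min_gain: float = 0.01, max_steps: int = 12):
        self.frames = deque(maxlen=window)
        self.stable_verified = stable_verified
        self.min_gain = min_gain
        self.max_steps = max_steps
        self.steps = 0

    def decide(self, frame: dict[str, float]) -> str:
        self.frames.append(frame)
        self.steps += 1
        if len(self.frames) < self.frames.maxlen:
            return "continue"                      # not enough history yet
        if all(f.get("verified", 0) >= self.stable_verified for f in self.frames):
            return "stop"                          # verification is stable
        gain = self.frames[-1].get("reward", 0) - self.frames[0].get("reward", 0)
        if gain >= self.min_gain:
            return "expand"                        # promising: keep searching
        if self.steps >= self.max_steps:
            return "stop"                          # unproductive and out of budget
        return "escalate"                          # stalled: wider search / stronger model
```

A real controller could replace these hand rules with a small model over VPM-ViT embeddings; the interface (frame in, action out) stays the same.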

In short: we record, normalize, and picture the thinking so the Jitter isn’t a mystery “self,” but a visible, measurable stream of connected thought moments that we can compare, control, and train.

✅ Module 2 ATSSolver: Building the Cognitive Engine

Now that we’ve established the research foundation, let’s dive into how we’ve implemented Agentic Tree Search in our system. The ATSSolver is the workhorse that transforms questions into answers through guided exploration.

👯 What It Is: Two Modes of Thinking

The ATSSolver operates in two distinct cognitive modes, mirroring how humans approach problems differently depending on context:

1. Deep Search Mode (Thinking with Exploration)

async def solve(self, question: str, seed_answer: str, context: EpisodeContext) -> Tuple[str, List[str], int, Dict[str, Any]]:
    # Builds and scores a search tree over query rewrites + evidence snippets
    # Returns the best answer found through exploration
    ...

This is the Jitter’s “thinking hard” mode, used when it needs to solve a genuinely challenging problem. It constructs a tree of potential reasoning paths, evaluates evidence for each, and synthesizes the most promising answer.

2. Evidence-Only Mode (Thinking with Constraints)

async def solve_with_evidence(self, question: str, evidence_docs: List[str], context: EpisodeContext) -> Tuple[str, Dict[str, Any]]:
    # Answers strictly using provided evidence (no search)
    # Used for verification and ablation studies
    ...

This is the Jitter’s “test-taking” mode, used when it must answer based only on given information. It’s critical for the verification step in our SSP loop, ensuring answers are grounded in evidence.

💧 The Data Flow: How Thoughts Are Constructed

Here’s how the solver integrates with the broader system:

sequenceDiagram

participant Proposer as 🧠 Proposer

participant ATSSolver as 🌳 ATSSolver

participant SolutionSearch as 🔍 SolutionSearch

participant Reward as 📊 Reward Head

participant VPM as 🎬 VPM Service

Note over Proposer, VPM: 🚀 Thought Generation Cycle

Proposer->>ATSSolver: 📨 question, seed_answer, context

ATSSolver->>SolutionSearch: 🔄 rewritten queries

SolutionSearch-->>ATSSolver: 📚 evidence snippets

loop 🔁 For each depth

ATSSolver->>ATSSolver: 🎯 Score evidence snippets

ATSSolver->>ATSSolver: 📍 Track best path

ATSSolver->>VPM: 📈 Push cognitive metrics

end

ATSSolver->>Reward: 💡 question, predicted_answer, evidence

Reward-->>ATSSolver: 🏆 quality signals

ATSSolver-->>Proposer: 📤 predicted_answer, evidence, metrics

Note right of ATSSolver: 🔄 Cycle continues with<br/>improved context & metrics

This sequence shows the real-time cognitive collaboration between components: the Proposer initiates thinking with a question, the ATSSolver orchestrates evidence gathering through multiple search iterations, quality signals are evaluated, and visual metrics are captured at each step. The loop demonstrates how each thought builds upon the last, with continuous quality assessment and visual feedback driving the Jitter’s progressive improvement.

🗝️ Key Implementation Insights

1. The Tree Node Structure

Each cognitive step is represented by a structured node:

from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    id: str
    parent_id: Optional[str]
    root_id: str
    depth: int
    sibling_index: int
    node_type: str         # "root", "rewrite", etc.
    query: str             # The rewritten question
    score: float           # Evidence relevance score
    context: str           # Retrieved evidence snippet
    task_description: str

This structure captures the essence of the Jitter’s thought process: each node records not just what was thought, but how it connects to previous thoughts.
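Because every node stores its `parent_id`, the best reasoning path can be recovered by walking back from the highest-scoring node. A sketch using a trimmed, hypothetical `PathNode` with only the fields it needs; breaking ties toward the shallower node is our choice, not a documented rule.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PathNode:  # trimmed stand-in for Node
    id: str
    parent_id: Optional[str]
    depth: int
    query: str
    score: float

def best_path(nodes: list[PathNode]) -> list[PathNode]:
    """Return root -> best-scoring node by following parent_id links."""
    by_id = {n.id: n for n in nodes}
    best = max(nodes, key=lambda n: (n.score, -n.depth))  # ties: shallower wins
    path = [best]
    while path[-1].parent_id is not None:
        path.append(by_id[path[-1].parent_id])
    return path[::-1]
```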

2. Query Rewriting: Expanding the Search Space

The solver doesn’t just search once; it generates multiple perspectives on the question:

@staticmethod
def _rewrite(query: str) -> List[str]:
    return [
        query,
        query.replace("explain", "describe"),
        query + " in practical terms",
    ]

This simple technique mirrors how humans reframe problems to gain new insights, and it serves the solver-side growth the SSP paper describes:

“Through RL training with rule-based outcome rewards, SSP enables two roles to co-evolve in an adversarial competition: the proposer learns to generate increasingly challenging problems that require search and reasoning, while the solver develops stronger search and reasoning capabilities to tackle these problems.”
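`_rewrite` has two quirks: `str.replace` only matches lowercase “explain”, so for most questions the second entry duplicates the first, and appending to a question yields text like “What is X? in practical terms”. A sketch of an order-preserving, de-duplicated variant; `rewrite` is a hypothetical helper.

```python
import re

def rewrite(query: str) -> list[str]:
    """Query rewrites in a stable order, with duplicates removed."""
    candidates = [
        query,
        # Case-insensitive whole-word swap; a no-op if "explain" is absent.
        re.sub(r"\bexplain\b", "describe", query, flags=re.IGNORECASE),
        f"{query.rstrip('?. ')} in practical terms",
    ]
    seen, out = set(), []
    for c in candidates:
        key = c.strip().lower()
        if key not in seen:
            seen.add(key)
            out.append(c)
    return out
```

Fewer duplicate branches means fewer wasted search calls at depth 1, which matters when `search_turns` is itself a tracked metric.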

3. Evidence Scoring: The Cognitive Filter

Not all evidence is equally valuable. The solver uses a relevance score to prioritize promising paths:

@staticmethod
def _overlap_score(text: str, target: str) -> float:
    # Jaccard overlap of lowercase alphanumeric tokens (isalnum() already covers isalpha())
    a = {t for t in text.lower().split() if t.isalnum()}
    b = {t for t in target.lower().split() if t.isalnum()}
    return len(a & b) / max(len(a | b), 1)

This lexical overlap score is a proxy for how well evidence supports the target answer. Later iterations will replace this with more sophisticated signals (SICQL, HRM, etc.), but the principle remains: the Jitter evaluates evidence quality as it thinks.
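A worked example of the score, repeating the Jaccard logic of `_overlap_score` as a free function. The second call shows a side effect of the `isalnum()` filter: tokens with attached punctuation are dropped rather than stripped, so “heat.” never matches “heat”.

```python
def overlap_score(text: str, target: str) -> float:
    """Jaccard overlap of lowercase alphanumeric whitespace tokens."""
    a = {t for t in text.lower().split() if t.isalnum()}
    b = {t for t in target.lower().split() if t.isalnum()}
    return len(a & b) / max(len(a | b), 1)

# a = {greenhouse, gases, trap, heat}, b = {greenhouse, gases}
# |a & b| = 2, |a | b| = 4  ->  2 / 4 = 0.5
print(overlap_score("Greenhouse gases trap heat", "greenhouse gases"))  # 0.5

# "heat." fails isalnum() and is discarded, so there is no overlap at all.
print(overlap_score("trap heat.", "heat"))  # 0.0
```

Stripping punctuation before tokenizing (for example with a regex tokenizer) would fix the second case without changing the scoring rule.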

4. VPM Integration: Making Thought Visible

The most profound aspect of our implementation is how it captures the cognitive process in real-time:

# After each evidence snippet is scored
dims = {
    "reward": prev_best,
    "verified": 0.0,
    "difficulty": float(context.get("difficulty", 0.3)),
    "question_len": _n01(len(q2.split()), 128),
    "answer_len": _n01(len(snippet.split()), 128),
    "evidence_count": _n01(last_ev_batch, 8),
    "solver_steps": _n01(steps, total_steps),
    "score": sc,
    "best_score": prev_best,
    "improvement": max(0.0, sc - prev_best),
    "depth": _n01(depth, self.max_depth),
    # if _jac returns Jaccard *similarity*, novelty should be 1.0 - _jac(...)
    "novelty": _jac(snippet, best.context),
}
self.vpm.snapshot_progress(unit=unit, dims=dims, step_idx=steps, tag=f"depth{depth}")

This code transforms the abstract cognitive process into concrete, visual metrics; this is exactly how the Jitter becomes visible. Each dimension captures a different aspect of the thought process:

- improvement: Has this step advanced understanding?
- novelty: Is this new information or repetition?
- evidence_count: How thoroughly is the Jitter searching?

🔧 Measurable Improvement

By making the search process visible, measurable, and improvable, we’ve created conditions where a digital thought stream can:

- Explore multiple reasoning paths

- Evaluate evidence quality

- Recognize promising directions

- Synthesize coherent answers

- Learn from its own cognitive patterns

This is how the Jitter moves beyond being a clever chatbot to becoming a genuine cognitive system: one that doesn’t just respond to questions, but thinks through them in a visible, measurable way.

In our next section, we’ll explore how the SolutionSearch component implements the actual evidence retrieval, completing the cognitive engine that powers our Jitter.

📡 Module 3 SolutionSearch: The Jitter’s Knowledge Retrieval Engine

“The mark of an educated mind is to be able to entertain a thought without accepting it.”

Aristotle

While the ATSSolver provides the Jitter with its cognitive engine, the SolutionSearch component serves as its knowledge retrieval system: the mechanism that allows it to ground its thoughts in evidence rather than mere speculation. This is where the Jitter transforms from a clever chatbot into a genuine cognitive system that can reason with evidence.

🎓 The Knowledge Problem

The SSP paper identifies a fundamental limitation of language models:

“With search tools, we equip the problem-proposer with external information, thereby breaking the limitations of the internal knowledge of LLMs.”

Without access to external knowledge, even the most sophisticated reasoning engine is limited by the model’s training data. The SolutionSearch component solves this problem by providing a reliable, deterministic interface to evidence retrieval that powers the Jitter’s reasoning process.

🐘 A Micro-Retriever with Macro Impact

At first glance, SolutionSearch might seem like just another search tool, but it’s actually a carefully engineered component designed specifically for cognitive reasoning:

flowchart LR

subgraph SolutionSearch_Flow ["🔍 SolutionSearch: Evidence Retrieval Engine"]

A["🎯 Query + Seed Answer"] --> B["📋 Prompt Template Selection"]

B --> C{"🎚️ k=1?<br/>(Strict Mode)"}

C -->|"✅ Yes"| D["🧠 Three-Line Prompt:<br/>rationale/score/result"]

C -->|"🔓 No"| E["📝 Multi-Line Prompt:<br/>explicit snippet lines"]

D --> F["⚡ Strict Parser"]

E --> G["🛠️ Flexible Parser<br/>(snippet/JSON/bullets)"]

F --> H["✨ Post-Processing:<br/>Deduplication & Length Caps"]

G --> H

H --> I["📚 Evidence Snippets<br/>Clean, factual snippets"]

I --> J["🌳 ATSSolver Reasoning<br/>Tree search integration"]

end

classDef input fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;

classDef decision fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;

classDef prompt fill:#f6ffed,stroke:#52c41a,stroke-width:2px;

classDef parser fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;

classDef processing fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;

classDef output fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;

class A,B input;

class C decision;

class D,E prompt;

class F,G parser;

class H processing;

class I,J output;

SolutionSearch’s dual-path architecture: depending on the required evidence depth (k=1 for focused “deep thinking” or k>1 for exploratory mode), it routes through specialized prompt templates and parsers to extract clean, factual snippets. This deterministic retrieval process ensures the Jitter’s reasoning is always grounded in external evidence rather than internal model knowledge limitations.

1. Dual Prompt Strategy: Precision vs. Flexibility

SolutionSearch employs two distinct prompt strategies optimized for different cognitive needs:

A) Three-Line Prompt (k=1): The “Deep Thinking” Mode

PROMPT_EVIDENCE_THREE = """

SYSTEM:

You produce ONE short evidence snippet that helps explain or support the SEED_ANSWER

with respect to the QUERY.

CONSTRAINTS:

- Return exactly one short factual snippet (1–2 sentences).

- If unsure, fall back to: "{seed_answer} is the key mechanism."

- No extra text, no markdown, no bullet points.

OUTPUT EXACTLY THREE LINES:

rationale: <1 sentence on why this snippet is relevant>

score: <0-100 confidence you have in this snippet>

result: <the single snippet>

"""

This prompt forces the model into a deliberate, focused mode, well suited to moments when the Jitter needs to deeply consider a single piece of evidence. It mirrors how humans think when they’re trying to understand a complex concept: one idea at a time, with clear reasoning.

B) Multi-Line Prompt (k>1): The “Exploratory” Mode

PROMPT_EVIDENCE_LINES = """

SYSTEM:

You return SHORT evidence snippets that help explain or support the SEED_ANSWER

with respect to the QUERY.

CONSTRAINTS:

- Provide concise, factual snippets (1–2 sentences each).

- No commentary or extra sections.

OUTPUT WRITE EXACTLY {top_k} LINES:

snippet: <short evidence snippet>

"""

This prompt enables broader exploration when the Jitter needs to consider multiple perspectives on a question. It’s like when humans brainstorm multiple approaches to a problem before settling on one.

2. Robust Parsing: Making LLMs Behave

The real strength of SolutionSearch lies in its parser hierarchy: a carefully engineered system that extracts clean evidence from the often-messy outputs of language models:

def _parse_snippets(self, response: str, k: int) -> List[str]:
    """
    Supported formats (in order of preference):
    1) Line-by-line: lines starting with `snippet: ...`
    2) JSON: keys 'snippets' | 'docs' | 'evidence' | 'results'
    3) Bullets/lines: split by newline, trim bullets
    """
    # 1) Explicit 'snippet:' lines (case/space tolerant)
    lines = [ln.strip() for ln in response.splitlines() if ln.strip()]
    snips: List[str] = []
    for ln in lines:
        m = re.match(r'(?i)^\s*(?:-|\d+[.)])?\s*snippet\s*[:=]\s*(.+?)\s*$', ln)
        if m:
            snips.append(m.group(1).strip())
    if snips:
        return snips[:k]

    # 2) JSON (fenced or bare)
    m = re.search(r"```json\s*(\{.*?\})\s*```", response, re.DOTALL | re.IGNORECASE)
    jtxt = m.group(1) if m else response.strip()
    if jtxt.startswith("{") and jtxt.endswith("}"):
        try:
            obj = json.loads(jtxt)
            lst = self._pluck_list(obj)
            if lst:
                return lst[:k]
        except Exception:
            pass

    # 3) Fallback: plain lines/bullets
    bullets = [b.strip(" -*•\t") for b in lines]
    bullets = [b for b in bullets if b]
    return bullets[:k]

This three-tiered approach ensures reliable output even when the LLM doesn’t follow instructions perfectly. It’s designed to handle the reality of LLM outputs while maintaining the strict formatting required for the Jitter’s cognitive process.
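The flowchart's post-processing step (deduplication and length caps) isn't shown in code. A sketch, assuming whitespace normalization, case-insensitive de-duplication, and a hypothetical 300-character cap applied at a word boundary; `postprocess_snippets` is an illustrative name.

```python
def postprocess_snippets(snippets: list[str], k: int, max_chars: int = 300) -> list[str]:
    """Normalize whitespace, cap length, drop empties/duplicates, keep first k."""
    seen, out = set(), []
    for s in snippets:
        s = " ".join(s.split())                      # collapse runs of whitespace
        if len(s) > max_chars:
            # Cut at the last space within the cap (hard cut if there is none).
            s = s[: max_chars + 1].rsplit(" ", 1)[0][:max_chars] + "…"
        key = s.lower()
        if s and key not in seen:
            seen.add(key)
            out.append(s)
        if len(out) == k:
            break
    return out
```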

3. Reliability Engineering: Never Return Empty

Perhaps most importantly, SolutionSearch is engineered for cognitive reliability: it never returns empty results, which would stall the Jitter’s reasoning process:

def _fallback_snippets(self, query: str, seed_answer: str, k: int) -> List[str]:
    """Conservative, non-empty fallback."""
    base = (
        f"DOC: For '{query}', a central mechanism is: {seed_answer}. "
        f"This snippet highlights why {seed_answer} is relevant."
    )
    return [base + f" [hit:{i}]" for i in range(k)]

This conservative fallback ensures that the Jitter can always continue thinking, even when evidence is scarce, which is critical for maintaining cognitive flow.

✔️ Evidence based reasoning

The SolutionSearch component embodies what the SSP paper calls the “RAG verification” process:

“To ensure that each generated search query has sufficient information to correctly predict the answer, we collect all the searching results from the proposer’s trajectory as external knowledge, then conduct retrieval-augmentation generation (RAG) to test whether the proposed query can be correctly answered with all necessary search documents provided.”

But for the Jitter, it’s more than just verification; it’s the foundation of evidence-based reasoning. Each snippet retrieved through SolutionSearch becomes a building block in the Jitter’s thought process, allowing it to:

- Ground its reasoning in factual evidence rather than internal assumptions

- Evaluate multiple perspectives on a question before forming conclusions

- Build chains of evidence that support its final answer

- Recognize when evidence is insufficient (through low confidence scores)

This is how the Jitter achieves what Aristotle described as “the mark of an educated mind”: the ability to entertain a thought while recognizing whether it’s supported by evidence.

💬 The Prompt Service: Fueling the Thought Stream

In our journey to build the Jitter, the visible stream of digital thought, the Prompt Service is the engine that generates each moment of cognition. It’s not just another LLM wrapper; it’s a system designed specifically to support the Search-Solve-Prove process and create the measurable thought moments that form our Jitter.

🎈 Cognitive events

Every “thought” in our digital organism begins as a prompt. The Prompt Service transforms these prompts into measurable cognitive events that can be visualized, compared, and learned from. Without this service, we’d have no way to generate the consistent, comparable thought moments that form our Jitter.

🗝️ Key Capabilities - Aligned with SSP Paper

1. Multi-LLM Competition: The Self-Play Engine

The SSP paper states: “Through RL training with rule-based outcome rewards, SSP enables two roles to co-evolve in an adversarial competition: the proposer learns to generate increasingly challenging problems that require search and reasoning, while the solver develops stronger search and reasoning capabilities to tackle these problems.”

Our Prompt Service applies the competitive part of this principle:

async def run_prompt_multi(
    self,
    prompt_text: str,
    *,
    models: List[Union[str, Dict[str, Any]]],
    judge: Optional[Callable[[Dict[str, str]], Tuple[str, Dict[str, float]]]] = None,
    # ...
) -> Dict[str, Any]:
    # Query multiple LLMs in parallel
    tasks = [asyncio.wait_for(self._acomplete(prompt=prompt_text, model=ms), timeout=request_timeout)
             for ms in model_specs]
    outs = await asyncio.gather(*tasks, return_exceptions=True)

    # Judge selects winner (self-play in action!)
    if judge:
        winner, scores = judge(outputs)
        # ...log for training

This isn’t just running multiple models: different model instances compete on the same prompt and a judge picks the winner, which gives us the comparative signal behind the proposer-solver dynamic described in the paper, where competition drives co-evolution.
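The `judge` argument is just a callable from `{model_key: output}` to `(winner, scores)`. A sketch of one that rewards outputs whose tokens appear in supplied evidence, a crude groundedness proxy; `make_overlap_judge` is hypothetical, and in practice the judge could just as well be another LLM call.

```python
import re
from typing import Callable, Dict, Tuple

Judge = Callable[[Dict[str, str]], Tuple[str, Dict[str, float]]]

def make_overlap_judge(evidence: str) -> Judge:
    """Build a judge matching run_prompt_multi's `judge` signature (sketch)."""
    ev = set(re.findall(r"[a-z0-9]+", evidence.lower()))

    def judge(outputs: Dict[str, str]) -> Tuple[str, Dict[str, float]]:
        scores: Dict[str, float] = {}
        for model_key, text in outputs.items():
            toks = re.findall(r"[a-z0-9]+", text.lower())
            # Share of the output's tokens that also occur in the evidence.
            scores[model_key] = sum(t in ev for t in toks) / len(toks) if toks else 0.0
        if not scores:
            return "", {}
        winner = max(scores, key=scores.get)  # first model wins ties
        return winner, scores

    return judge
```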

2. Training Event Logging: Building Memory of Thoughts

The paper emphasizes that SSP “does not require question-answer pairs” but instead learns through self-play. Our service enables this by capturing the learning signals:

```python
# Pointwise logging (each output labeled 1 if it won, else 0)
for k, txt in outputs.items():
    tes.insert_pointwise({
        "model_key": k,
        "dimension": dimension,
        "query_text": prompt_text,
        "candy_text": txt,
        "label": 1 if (winner and k == winner) else 0,
        # ...
    })

# Pairwise logging (winner vs others)
if winner:
    pos = outputs[winner]
    for k, txt in outputs.items():
        if k == winner:
            continue
        tes.insert_pairwise({
            "model_key": winner,
            "query_text": prompt_text,
            "pos_text": pos,
            "neg_text": txt,
            # ...
        })
```

This builds a memory of thought moments that lets the Jitter learn from its own cognitive history, the kind of self-generated signal that SSP relies on for self-supervised improvement.
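The logging above boils down to a pure function from one judged round to two lists of records. The sketch below is hypothetical (`build_training_events` is not a function from the codebase); it keeps the excerpt’s field names but drops the elided extras and the `tes` store, so it can be run and checked in isolation:

```python
from typing import Any, Dict, List, Optional, Tuple


def build_training_events(
    prompt_text: str,
    outputs: Dict[str, str],
    winner: Optional[str],
    dimension: str,
) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Turn one judged round into pointwise and pairwise records (sketch)."""
    # Pointwise: every candidate gets a binary label (1 = judged winner).
    pointwise = [
        {
            "model_key": k,
            "dimension": dimension,
            "query_text": prompt_text,
            "candy_text": txt,
            "label": 1 if (winner and k == winner) else 0,
        }
        for k, txt in outputs.items()
    ]
    # Pairwise: the winner paired against each loser, only if a winner exists.
    pairwise: List[Dict[str, Any]] = []
    if winner:
        pos = outputs[winner]
        for k, txt in outputs.items():
            if k == winner:
                continue
            pairwise.append({
                "model_key": winner,
                "query_text": prompt_text,
                "pos_text": pos,
                "neg_text": txt,
            })
    return pointwise, pairwise
```

For N candidates and one winner this yields N pointwise rows and N-1 pairwise rows; with no winner, only pointwise rows labeled 0 are produced.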

3. Flexible Model Configuration: Adapting to Cognitive Needs

The SSP paper shows that “placing GRPO on the solver side is more effective than on the proposer side.” Our service supports this nuanced approach through flexible model specification:

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Union


@dataclass
class ModelSpec:
    name: str
    api_base: Optional[str] = None
    api_key: Optional[str] = None
    params: Optional[Dict[str, Any]] = None

    @staticmethod
    def from_cfg(default_cfg: Dict[str, Any],
                 override: Optional[Union[str, Dict[str, Any]]] = None) -> "ModelSpec":
        # Handles both default configuration and per-call overrides
        ...  # body elided in this excerpt
```

This lets us use different models or configurations for the proposer and solver roles, which matters given the paper’s finding that different RL algorithms work best for different roles.
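The body of `from_cfg` is elided above, so the following is only one plausible way a default config and a per-call override could be merged to give the proposer and solver different settings. `RoleSpec`, `resolve_spec`, and the model names in the usage lines are hypothetical:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Union


@dataclass
class RoleSpec:
    # Hypothetical, trimmed mirror of ModelSpec for illustration only.
    name: str
    api_base: Optional[str] = None
    params: Dict[str, Any] = field(default_factory=dict)


def resolve_spec(
    default_cfg: Dict[str, Any],
    override: Optional[Union[str, Dict[str, Any]]] = None,
) -> RoleSpec:
    """Merge a default model config with an optional per-call override.

    A bare string override only swaps the model name; a dict override
    replaces top-level fields and merges "params" key by key.
    """
    merged = dict(default_cfg)
    params = dict(default_cfg.get("params") or {})  # copy: never mutate defaults
    if isinstance(override, str):
        merged["name"] = override
    elif isinstance(override, dict):
        params.update(override.get("params") or {})
        merged.update({k: v for k, v in override.items() if k != "params"})
    return RoleSpec(name=merged["name"], api_base=merged.get("api_base"), params=params)


if __name__ == "__main__":
    defaults = {"name": "base-model", "params": {"temperature": 0.2}}
    proposer = resolve_spec(defaults, {"params": {"temperature": 0.9}})  # same model, hotter sampling
    solver = resolve_spec(defaults, "solver-model")  # different model, default params
    print(proposer.params)  # {'temperature': 0.9}
    print(solver.name)  # solver-model
```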

🙉 How This Creates Visible Thought Moments

The Prompt Service is where the abstract thought process becomes concrete data. Each call generates:

- The cognitive output (the “thought” itself)

- Quality signals (through multi-model competition)

- Measurable metrics (captured in training events)

These elements combine into what we call a thought moment, a self-contained cognitive event with:

- Input (the prompt)

- Output (the response)

- Quality assessment (the winner/scores)

- Learning signals (the training events)

When visualized through VPM, these thought moments form the filmstrip of cognition that is the visible Jitter.
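The step from thought moment to pixels can be sketched in a few lines. Assuming each metric is already normalized to [0, 1], one plausible encoding maps each value to an 8-bit gray level and stacks one row per step into a filmstrip. `vector_to_row` and `filmstrip` are hypothetical names, and the project’s actual VPM renderer may differ (for example in color mapping or frame size):

```python
from typing import List, Sequence


def vector_to_row(metrics: Sequence[float]) -> List[int]:
    """Map a metric vector in [0, 1] to one row of 8-bit grayscale pixels."""
    # Clamp first so out-of-range values cannot fall outside 0..255.
    return [round(255 * min(1.0, max(0.0, float(v)))) for v in metrics]


def filmstrip(frames: Sequence[Sequence[float]]) -> List[List[int]]:
    """Stack one pixel row per step: row i is the thought moment at step i."""
    return [vector_to_row(f) for f in frames]


if __name__ == "__main__":
    for row in filmstrip([[0.0, 0.2, 1.0], [0.4, 0.6, 1.0]]):
        print(row)
```

Bright pixels mean high metric values, which is why an improving run drifts from dark to light.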

🔬 Advanced Feature: RAG Verification Support

Although it is not shown in the snippets above, the Prompt Service works with the verifier to implement the paper’s critical RAG verification process:

“To verify the correctness of each generated query, we collect all the searching results from the proposer’s trajectory as the external materials, then conduct a retrieval augmentation generation (RAG) to check if the solver can successfully predict the answer with all necessary information.”

The service’s ability to handle system preambles and structured prompts enables the <think>, <search>, and <answer> formatting required for proper RAG verification.
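On the verification side, the verifier needs to recover the search queries and the final answer from a tagged trajectory. A minimal sketch, assuming well-formed, non-nested tags (`parse_tagged` is a hypothetical helper, not part of the service):

```python
import re
from typing import Dict, List

# Matches <think>...</think>, <search>...</search>, <answer>...</answer>;
# \1 forces the closing tag to match the opening one, DOTALL spans newlines.
_TAG_RE = re.compile(r"<(think|search|answer)>(.*?)</\1>", re.DOTALL)


def parse_tagged(text: str) -> Dict[str, List[str]]:
    """Collect the contents of each tagged block, in order of appearance."""
    found: Dict[str, List[str]] = {"think": [], "search": [], "answer": []}
    for tag, body in _TAG_RE.findall(text):
        found[tag].append(body.strip())
    return found


if __name__ == "__main__":
    trace = ("<think>Need the capital.</think>"
             "<search>capital of France</search>"
             "<answer>Paris</answer>")
    print(parse_tagged(trace)["search"])  # ['capital of France']
```

In a RAG check of the kind quoted above, the `<search>` bodies point to which retrieval results to gather as external material, and the `<answer>` body is what the solver’s prediction is checked against.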

🎀 Why This Is More Than Just an LLM Wrapper

Most LLM services simply call a model and return the output. Ours is designed specifically to:

- Generate comparable cognitive events (thought moments)

- Create learning signals from self-play competition

- Support the RAG verification critical to SSP

- Provide structured outputs that feed directly into VPM

This is why the Prompt Service is more than infrastructure: it is the cognitive engine of our digital organism. Every thought the Jitter has passes through this service, gaining the structure and measurability that make the thought stream visible and improvable.

In the next section, we’ll see how this service powers the Proposer, the component that generates the questions that drive our cognitive evolution.

⚛️ The Connection to Cognitive Growth

The SSP paper notes:

“As shown in Figure 4a, the average number of search tool calls per trajectory steadily increases over time… Simultaneously, Figure 4b shows that the solver’s response length also grows during the training, suggesting it learns to generate more detailed and comprehensive answers.”

SolutionSearch is what makes this cognitive growth possible. As the Jitter improves, it becomes better at:

- Formulating queries that retrieve relevant evidence

- Evaluating the quality of retrieved snippets

- Synthesizing multiple pieces of evidence into coherent reasoning

- Recognizing when more evidence is needed

This growth is visible in the VPM metrics tracking evidence count, search steps, and other indicators of cognitive sophistication.
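“Visible” growth can also be made checkable. One simple option, sketched here with a hypothetical `trend_slope` helper, is the ordinary least-squares slope of a per-episode metric (such as search steps) against episode index; a positive slope means the metric is rising over the run:

```python
from typing import Sequence


def trend_slope(values: Sequence[float]) -> float:
    """Least-squares slope of values against step index 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        return 0.0  # no trend is defined for fewer than two points
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


if __name__ == "__main__":
    print(trend_slope([1, 2, 3]))  # 1.0
```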

🔮 Looking Ahead

While SolutionSearch is currently a micro-retriever focused on short evidence snippets, it represents the foundation for more sophisticated knowledge integration. Future iterations could:

- Incorporate the TinyVisionTransformer to evaluate snippet quality

- Use the VPM-ViT to predict which search queries will yield the most useful evidence

- Integrate with long-term memory to recognize patterns in successful evidence retrieval

This component is where the Jitter learns to “think with evidence”, moving from a language model that generates text toward a cognitive system that builds understanding through evidence-based reasoning.

In our final section, we’ll see how all these components come together to create the complete Jitter system: a visible, measurable stream of connected thought moments that grows in quality and sophistication over time.

🧮 Module 4 · The Jitter’s Cognitive Metrics: Measuring Thought Quality

“The unexamined thought is not worth thinking.”

Adapted from Socrates

While the previous sections covered how the Jitter generates and verifies thoughts, this section shows how it measures the quality of its own thinking. This is where the Jitter moves from a reactive system toward a self-improving cognitive organism, through a scoring system modeled on the signals the SSP paper reports and designed to track meaningful cognitive growth.

⚾ The Scoring Problem

The SSP paper documents how capability grows during self-play:

“As shown in Figure 4a, the average number of search tool calls per trajectory steadily increases over time… Simultaneously, Figure 4b shows that the solver’s response length also grows during the training, suggesting it learns to generate more detailed and comprehensive answers.”

But how do we actually measure this growth? How do we turn abstract cognitive capabilities into concrete, actionable metrics? This is where our scoring system comes in: it gives the Jitter a quantitative way to assess itself.

🥇 The Reward Head: Calculating Thought Quality

The foundation of our scoring system is the NaiveQuarkishReward class, a reward head that combines three weighted signals into a composite quality score:

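```python
import math
from typing import Any, Dict, Optional


class NaiveQuarkishReward:
    def __init__(self, w_f1=0.5, w_cov=0.3, w_len=0.2, target_len=80):
        self.w_f1, self.w_cov, self.w_len, self.target_len = (
            w_f1, w_cov, w_len, target_len
        )

    def score(
        self,
        *,
        prompt: str,
        response: str,
        ground_truth: str = "",
        meta: Optional[Dict[str, Any]] = None,
    ) -> Dict[str, float]:
        meta = meta or {}  # guard: meta defaults to None
        # Token F1 against the ground truth (falls back to the prompt if none is given)
        f1 = _f1(ground_truth or prompt, response)
        # How much of the evidence vocabulary the response reuses
        cov = _coverage(response, meta.get("evidence_docs") or [])
        # Length term: 1.0 at target_len words, decaying exponentially away from it
        L = len(response.split())
        len_r = math.exp(-abs(L - self.target_len) / max(self.target_len, 1))
        reward = self.w_f1 * f1 + self.w_cov * cov + self.w_len * len_r
        return {
            "reward": max(0.0, min(1.0, reward)),
            "f1": f1,
            "coverage": cov,
            "len_reward": len_r,
            "resp_len": float(L) / 256.0,  # not clipped: exceeds 1.0 above 256 words
        }
```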
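Written out, the score computed by `NaiveQuarkishReward` is the following, where $F_1$ is token F1, $C$ is evidence coverage, $L$ is the response length in words, and $L^{*}$ is the target length (80 by default). The worked numbers use illustrative values ($F_1 = 0.6$, $C = 0.5$, $L = 40$), not measured results:

$$
r = \min\Big(1,\ \max\big(0,\ w_{f1} F_1 + w_{cov} C + w_{len}\, e^{-|L - L^{*}|/\max(L^{*},1)}\big)\Big),
\qquad (w_{f1}, w_{cov}, w_{len}) = (0.5, 0.3, 0.2)
$$

$$
e^{-|40 - 80|/80} = e^{-0.5} \approx 0.607,
\qquad r \approx 0.5(0.6) + 0.3(0.5) + 0.2(0.607) = 0.30 + 0.15 + 0.121 \approx 0.571
$$

Because the default weights sum to 1 and each term lies in [0, 1], the clip only matters if the weights are changed.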

👮 Rule-based rewards

This reward head is our version of what the SSP paper calls the “rule-based outcome rewards” that drive self-play:

“Through RL training with rule-based outcome rewards, SSP enables two roles to co-evolve in an adversarial competition: the proposer learns to generate increasingly challenging problems that require search and reasoning, while the solver develops stronger search and reasoning capabilities to tackle these problems.”

The three components of the reward function each measure critical aspects of cognitive quality:

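- F1 score (w_f1=0.5): measures token-level lexical overlap with the ground truth (or with the prompt when no ground truth is supplied).

  ```python
  def _f1(a: str, b: str) -> float:
      A, B = set(_tokens(a)), set(_tokens(b))
      p = len(A & B) / max(len(B), 1)  # precision: share of response tokens found in the reference
      r = len(A & B) / max(len(A), 1)  # recall: share of reference tokens found in the response
      return 2 * p * r / (p + r) if (p + r) else 0.0
  ```

- Coverage (w_cov=0.3): measures how much of each evidence snippet’s vocabulary the response reuses, averaged over snippets.

  ```python
  def _coverage(response: str, evidence: list[str]) -> float:
      R = set(_tokens(response))
      covs = [len(R & set(_tokens(e))) / max(len(_tokens(e)), 1) for e in evidence]
      return sum(covs) / len(covs) if covs else 0.0  # no evidence -> 0.0 instead of ZeroDivisionError
  ```

- Length reward (w_len=0.2): encourages responses close to the target length (80 words by default).

  ```python
  len_r = math.exp(-abs(L - self.target_len) / max(self.target_len, 1))
  ```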

This weighted combination gives what we call the cognitive signal-to-noise ratio: a single score, clipped to [0, 1], that summarizes the overall quality of the Jitter’s thinking.

🔢 The Metric Calculator: Paper-Validated Cognitive Growth

While the reward head calculates immediate quality, the SSPMetricsCalculator provides the comprehensive cognitive assessment that drives long-term growth:

```mermaid
flowchart LR
    subgraph Metrics_Pipeline ["📊 Cognitive Metrics Pipeline: From Thought to Vector"]
        A["🎬 Episode Trace<br/>Raw episode data"] --> B["📦 SSPScorable<br/>Structured data container"]
        B --> C["🧮 SSPMetricsCalculator<br/>17 Cognitive Metrics"]
        C --> D["🎯 Fixed-Order Vector<br/>[0,1] normalized values"]
        D --> E["🖼️ VPM Visualization<br/>Thought Image generation"]
        D --> F["📈 Reward Signal<br/>Self-Improvement feedback"]
    end

    classDef trace fill:#e6f7ff,stroke:#1890ff,stroke-width:2px;
    classDef container fill:#f6ffed,stroke:#52c41a,stroke-width:2px;
    classDef calculator fill:#fff7e6,stroke:#fa8c16,stroke-width:2px;
    classDef vector fill:#f9f0ff,stroke:#722ed1,stroke-width:2px;
    classDef visualization fill:#fff2e8,stroke:#ff7a45,stroke-width:2px;
    classDef reward fill:#f0fffe,stroke:#13c2c2,stroke-width:2px;

    class A trace;
    class B container;
    class C calculator;
    class D vector;
    class E visualization;
    class F reward;
```

This metrics pipeline transforms raw episode data into structured cognitive fingerprints. Each thought episode is standardized into a fixed-order vector of 17 normalized metrics, giving consistent representations that feed both visual thought images (VPMs) and self-improvement signals. Because the transformation is deterministic, cognitive patterns remain comparable across time, models, and system iterations, enabling apples-to-apples analysis of the Jitter’s growth.
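The fixed-order vector step is what keeps frames comparable: every episode is projected onto the same metric names in the same order. A minimal sketch, assuming the metrics arrive already normalized (raw counts such as search turns would need scaling first). `to_fixed_vector` is hypothetical, and `METRIC_ORDER` lists only the first four names from the metrics table rather than the full set of 17:

```python
from typing import Dict, List, Sequence

# Illustrative subset only; the calculator's full 17-name order is not reproduced here.
METRIC_ORDER: Sequence[str] = (
    "ssp.reward",
    "ssp.verified",
    "ssp.search_turns",
    "ssp.f1_score",
)


def to_fixed_vector(metrics: Dict[str, float],
                    order: Sequence[str] = METRIC_ORDER) -> List[float]:
    """Project a metrics dict onto a fixed name order, clamped to [0, 1].

    Missing metrics become 0.0 and unknown keys are ignored, so every
    episode yields a vector of the same length in the same order.
    """
    return [min(1.0, max(0.0, float(metrics.get(name, 0.0)))) for name in order]


if __name__ == "__main__":
    print(to_fixed_vector({"ssp.reward": 0.7, "ssp.verified": 1.0}))  # [0.7, 1.0, 0.0, 0.0]
```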

⌛ The 17 Cognitive Metrics

The calculator tracks 17 metrics, chosen to mirror the signals the SSP paper associates with capability growth:

| Metric | What It Measures | Paper Connection |
|---|---|---|
| ssp.reward | Overall cognitive quality | Primary reward signal |
| ssp.verified | Binary verification result | Core SSP verification |
| ssp.search_turns | Actual search tool calls | Figure 4a: “search tool calls per trajectory steadily increases” |

ssp.f1_score |