The Space Between Models Has Holes: Mapping the AI Gap

Oct 22, 2025

🌌 Summary

What if the most valuable insights in AI evaluation aren’t in model agreements, but in systematic disagreements?

This post reveals that the “gap” between large and small reasoning models contains structured, measurable intelligence about how different architectures reason. We demonstrate how to transform model disagreements from a problem into a solution, using the space between models to make tiny networks behave more like their heavyweight counterparts.

We start by assembling a high-quality corpus (10k–50k conversation turns), score it with a local LLM to create targets, and train both HRM and Tiny models under identical conditions. Then we run fresh documents through both models, collecting not just final scores but rich auxiliary signals (uncertainty, consistency, OOD detection, etc.) and visualize what these signals reveal.

The core insight: use the “space around the score” to shrink the capability gap. We show how to standardize signals into Shared Core Metrics, create visual representation of each models knowledge, and apply lightweight calibration to make Tiny behave more like its big bro 👨👦 HRM where it matters.

What you’ll build with us:

- 🧠 Design, Implement train and use a new model the Tiny Recursive Model

- 🏋️♂️ Train HRM and Tiny on identical supervision from a local LLM

- 🌊 Use these trained models to score a large amount of similar data

- ⚖️ Extract, align, and standardize auxiliary diagnostics into a shared communication protocol

- 📸 Create visual analysis: score intensity images, frontier maps, and difference maps

- ✨ Discover information between or outside the model results, so in the area they are not talking about. This is the information in the

Gap. - 🔧 Implement practical calibration & routing that drive understanding of gap structure.

- 🤯 Use this same process on two unrelated Hugging Face models and find information there too.

Note: As the post developes it will get more technical. We have saved the difficult math code etc. for the appendices.

📃 Foundation Papers

Hierarchical Reasoning Model (HRM)

HRM: Hierarchical Reasoning Model

A hierarchical, multi-head reasoner with rich diagnostics and greater capacity.

We built and described this model here: Layers of thought: smarter reasoning with the Hierarchical Reasoning Model

Tiny Recursive Model (Tiny)

Tiny: Less is More: Recursive Reasoning with Tiny Networks

A compact, recursive scorer designed as a practical stand-in for HRM: faster, smaller, deployable.

As part of this post we will build describe and use this new model.

👁️ First Glimpse: The Gap Isn’t Empty

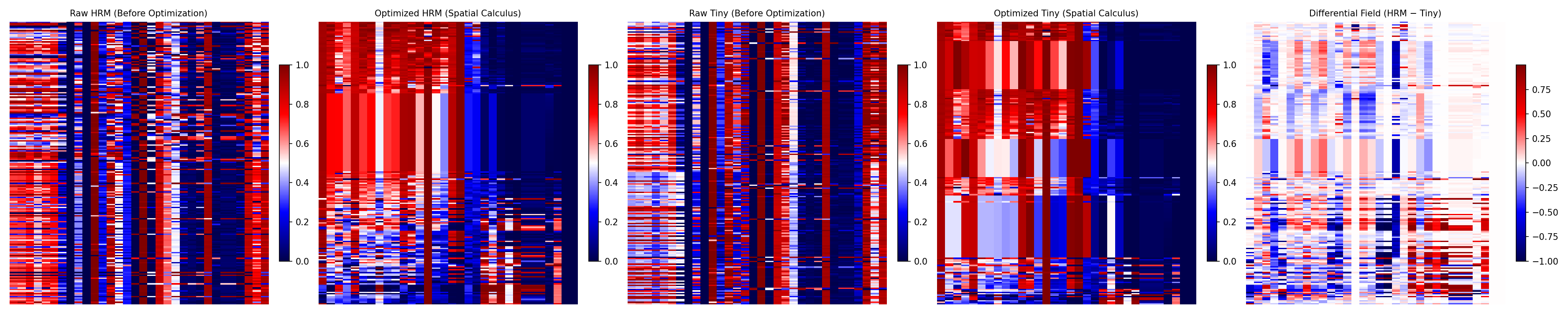

Before we dive into methodology, see what we mean by “structured disagreement”:

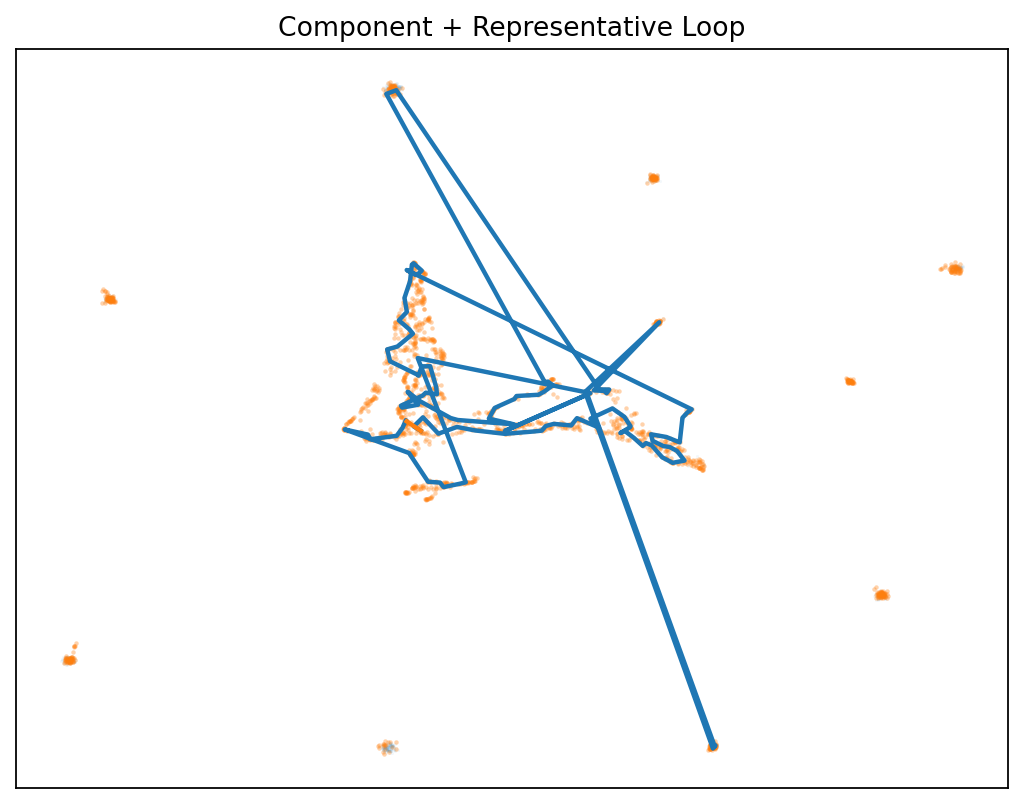

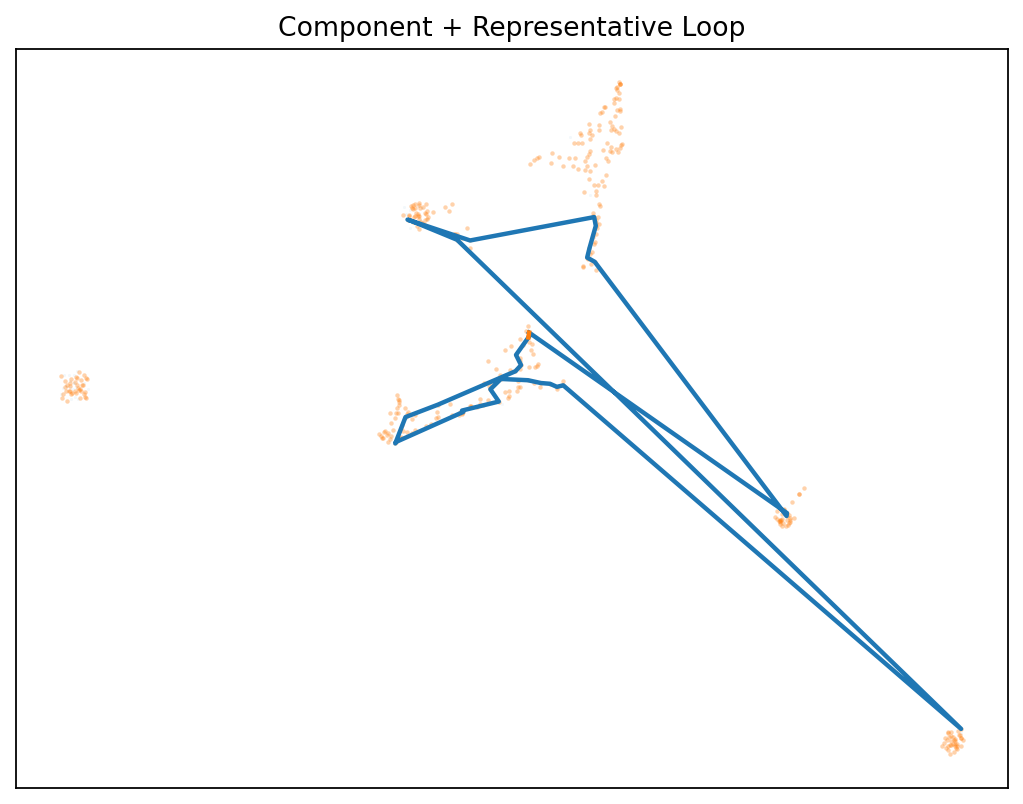

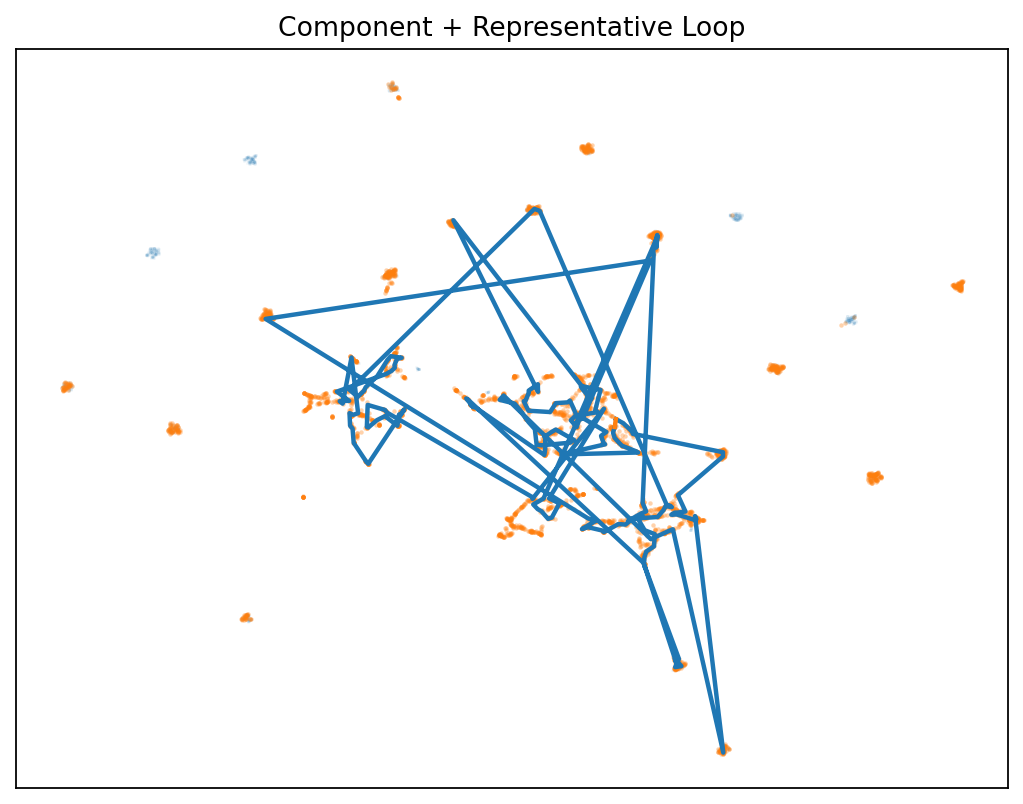

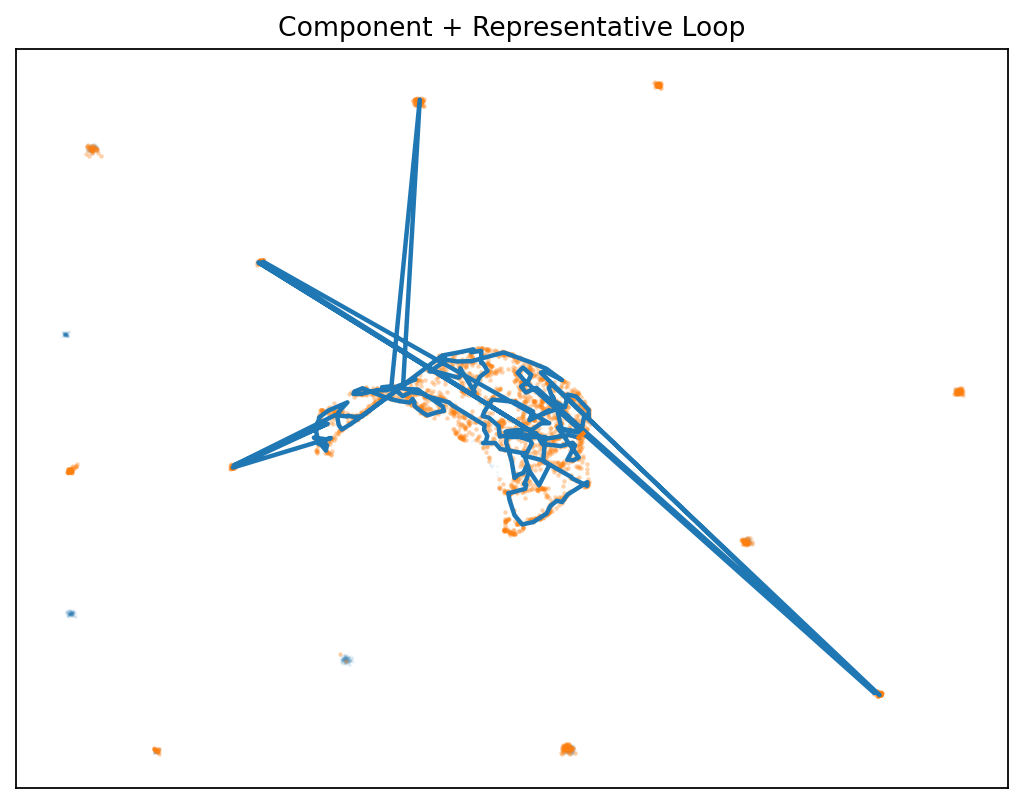

| Small vs Small | HRM vs Tiny (100) | HRM vs Tiny (500) | HRM vs Tiny (1000) |

|---|---|---|---|

|

|

|

|

| Small model disagreement | Emerging structure | Complex patterns | Stable features |

| Samples: 500 | Samples: 100 | Samples: 500 | Samples: 1000 |

| google/gemma-2-2b-it | HRM | HRM | HRM |

| HuggingFaceTB/SmolLM3-3B | Tiny | Tiny | Tiny |

The discovery: Disagreement forms measurable structures that:

- 🌀 Persist as loops (H₁ homology) in the difference field

- 📈 Grow more complex with more samples

- 🔁 Replicate across architectures (local & Hugging Face)

- 🎯 Enable smarter routing between model capabilities

Once you can see the structure in disagreement space, you can route, calibrate, and train on the frontier.

📔 What Does It All Mean?

Same data. Same goal. Two minds. Different physics. We align them and visualize the layer in between.

Two models can reach similar answers while thinking completely differently. We take identical data and targets, run both HRM (heavyweight) and Tiny (lightweight), then ask: what lives in the space between them?

By aligning outputs and computing:

$$ \Delta = \text{HRM} - \text{Tiny} $$ Vive la difference

Vive la difference

- This shows the result of two AIs that both have been trained on th exact same information and are both trying to execute the same 1000 tasks. If they were perfect.

- If they were a perfect match the final image would be blank. This is what we mean when we say there is information in the Gap.

- The rest of this post will describe hwo we detected this information.

Ok so what?

The “between-space” becomes a visible field not featureless noise, but containing loops, clusters, and persistent structures that neither model shows alone.

The payoff: You’ll not only know what each model decides, but where each model can’t see and how to exploit those blind spots.

— Not

🔛 Preliminaries

These posts will help you understand some of the information in this post

| Post | Description | What it is used | Where Here |

|---|---|---|---|

| HRM | The post explains and implements the HRM model | Everything | This is the target model in our analysis |

| ZeroModel | The post explains ZeroModel | Used to generate VPM images adn do image analysis | The library is used to do the visual processing component |

| Phos | The post explains Phos a visual approach to AI | This post builds on that post | We extend the concepts in that post here |

👁️🗨️ What is Visual AI (short aside)

Instead of wading through logs, you see what the model is doing in real time.

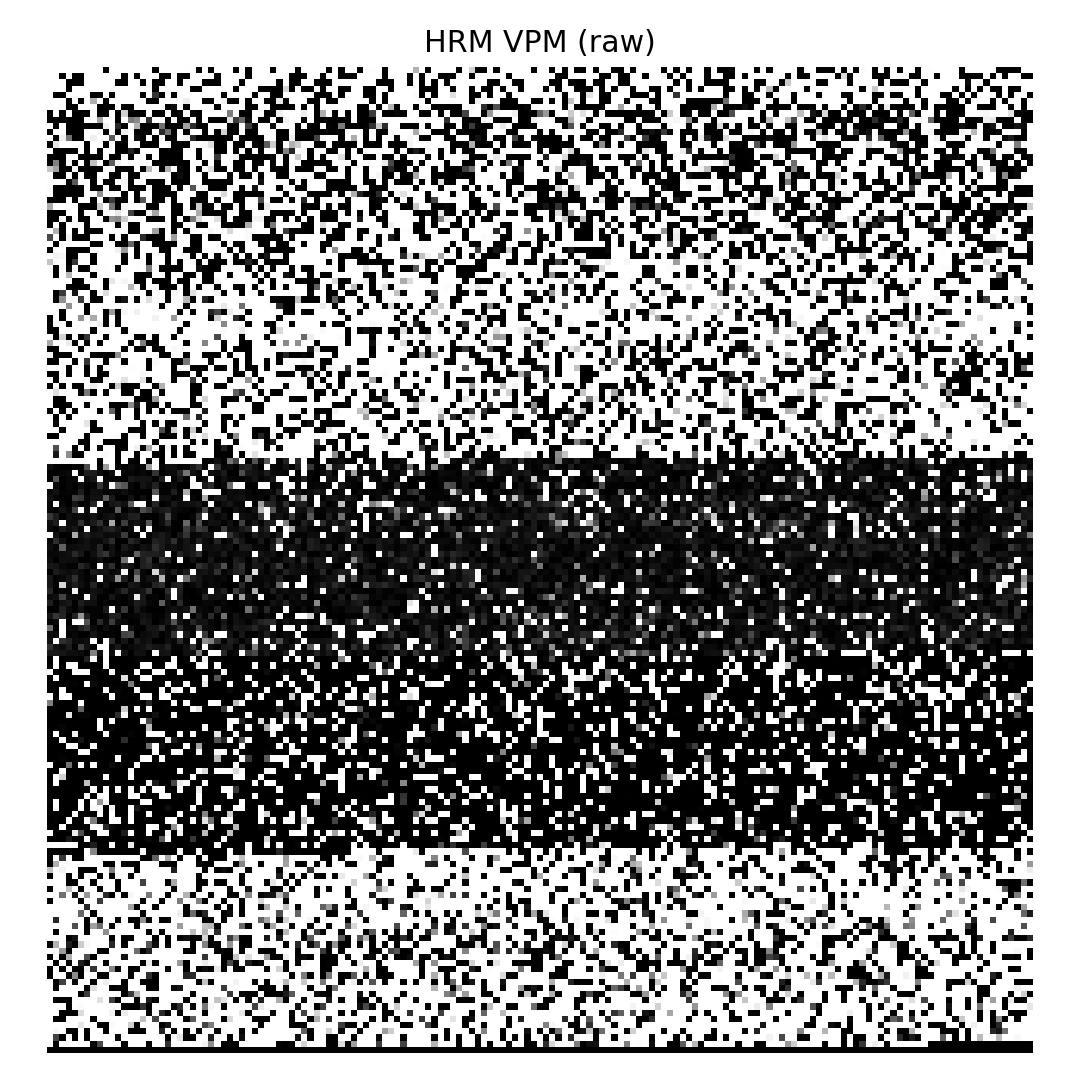

| 🐞 Tiny (raw VPM with bug) | HRM (raw VPM) |

|---|---|

|

|

One glance = one diagnosis. A raw VPM tile is just turns × features reshaped to an image (rows ≈ turns, columns ≈ metric channels). In the Tiny pane, the single bright horizontal band means only one metric column was non-zero. In the HRM pane, texture appears across all features healthy.

What actually broke (Tiny): a heteroscedastic loss term (exp(-log_var)) blew up when log_var went very negative on non-reasoning dimensions. The precision term exploded, turning a sane loss (~6.38) into 221,118.81 within two epochs before NaN, silently zeroing those channels while the Reasoning channel (more stable) survived. The picture tells the story instantly no log spelunking required.

Plain English: one calculation went astronomically large, so everything else looked black by comparison.

Why Visual AI is ridiculously leverageful

They say a picture tells a thousand words. In our case, one tile encodes millions of signals:

- A 2400×2400 tile packs 5.76M pixel-level values.

- Each pixel corresponds to a concrete statistic (a score, residual, uncertainty, or latent).

- Your eye does instant change detection orders of magnitude more bandwidth than reading one scalar at a time.

So instead of comparing two numbers, you’re comparing two fields. The contrast between the Tiny and HRM images above makes the failure mode obvious at a glance. This is the core of Visual AI: turn numerical behavior into a visual artifact your brain can parse in milliseconds.

Craft notes (how we render these)

- We rasterize turns × features to a fixed canvas; each channel is min–max or robust-scaled per run for comparability.

- We keep a consistent dimension order (Reasoning, Knowledge, Clarity, Faithfulness, Coverage, …) so bands line up across runs.

- We ship both the raw arrays and the PNGs/GIFs pictures for humans, tensors for code.

If you remember one thing: a single glance can replace a million log lines and it will catch classes of failures (like exploding precision) that are easy to miss when you’re scrolling numbers.

✈️ Data Defines the Journey

Our dataset is our own conversation history with foundation models long, iterative chats aimed at building a self-improving AI so we know its character and quality, and that shaped every design choice we made (e.g., we didn’t have to lean hard on safety or faithfulness filters). Your journey may be different: if your conversations are noisier, safety-sensitive, or domain-specific, you’ll tune the pipeline differently (normalization, guardrails, faithfulness checks, caps). Key point: the dataset you start with determines the path you take mine yours for what matters to your goal, then adjust the knobs to fit your reality.

🧱 The Foundation: Multi-Dimensional Reasoning Scoring

Before we could compare reasoning models, we needed a consistent, structured way to evaluate reasoning itself. Traditional single-number scores collapse too much nuance good reasoning isn’t monolithic. It has facets.

So we defined five orthogonal dimensions that collectively capture what makes reasoning good:

| Dimension | What It Measures |

|---|---|

| Reasoning | Logical structure, multi-hop soundness, handling of assumptions and edge cases |

| Knowledge | Factual accuracy, specificity, and goal-advancing utility |

| Clarity | Organization, readability, scannability, and directness |

| Faithfulness | Consistency with context/goal, absence of hallucination |

| Coverage | Completeness across key facets implied by the question |

Note: we rejected some dimensions safety, faithfulness… because we were dealing data from foundation models and we know they would be very strong there.

- We wrote about dimensions here: Dimensions of Thought

- We wrote importing chat conversation history here: Episteme: Distilling Knowledge into AI

🌌 Why these five Dimensions?

We didn’t choose these arbitrarily. Through iterative analysis of high-quality vs. low-quality reasoning patterns, we identified these as the minimal set that:

- Covers distinct aspects of reasoning (minimal overlap)

- Is measurable with high inter-rater agreement

- Maps to observable improvements in downstream tasks

- Provides actionable feedback for refinement

Most importantly: these dimensions survive the “so what?” test. When we adjust a response to score higher in one dimension, human evaluators consistently rate it as better reasoning.

This common language is what makes the gap field visible without it, we’d be comparing apples to oranges.

🧭 The Scoring Engine prompts that make models think, not just rate

We don’t want numbers, we want reasoned numbers. The trick isn’t “ask for 1–5.” It’s forcing the model to analyze → decide → justify, in that order, for each dimension.

Our scoring engine wraps each dimension (Reasoning, Knowledge, Clarity, Faithfulness, Coverage) with a discipline loop:

- Narrow role → judge a single facet only

- Concrete criteria → what to reward & penalize

- Hard output contract → two lines: rationale + score

This converts vague ideas into stable, auditable signals.

The pattern (for one dimension: Knowledge)

SYSTEM:

You are a precise knowledge judge. You evaluate whether an assistant’s answer contains useful, true,

goal-advancing knowledge for the given user question. Be strict and concise.

CONVERSATION TITLE (goal):

{{ goal_text }}

USER QUESTION:

{{ user_text }}

ASSISTANT ANSWER:

{{ assistant_text }}

{% if context %}OK

OPTIONAL CONTEXT (may include prior turns, files, constraints):

{{ context }}

{% endif %}

{% if preferences %}

USER PREFERENCES (if any):

{% for p in preferences %}- {{ p }}

{% endfor %}

{% endif %}

INSTRUCTIONS:

1. Judge only the answer’s factual content and utility for the goal. Focus on specificity, correctness, and actionable details relevant to the question.

2. Reward: verifiably correct facts, precise terminology, concrete steps that advance the goal.

3. Penalize: hallucinations, outdated/wrong facts, irrelevant info, hedging without checks, missing key facts.

4. If there isn’t enough information to judge, treat as low score.

SCORING RUBRIC (whole numbers):

90–100: Accurate, specific, directly useful knowledge.

75–89: Mostly accurate and helpful; minor omissions.

60–74: Some value but notable uncertainty or gaps.

40–59: Weak; generic or risky to follow.

1–39: Poor; inaccurate/misleading.

0: Non-answer.

RETURN FORMAT (exactly two lines):

rationale: <brief reason, 1–3 sentences>

score: <0–100>

Why this works

- Narrow role prevents “dimension bleed” (e.g., docking Knowledge for writing style).

- Reward/Penalize lists anchor judgment in observable behaviors.

- Two-line contract forces think → commit → explain. Failures are obvious (bad format) and debuggable.

Five prompts, five lenses

We reuse the same skeleton, swapping the instruction block:

- Reasoning → structure, multi-hop soundness, assumptions/edge cases

- Knowledge → accuracy, specificity, goal-advancing utility

- Clarity → organization, scannability, directness

- Faithfulness → consistency with context/goal, no hallucination

- Coverage → completeness of key facets implied by the question

Together they produce a multi-dimensional profile of an answer. Adjust or add dimensions to match your domain (you could run 10–20 facets the same way).

Normalization (the “quiet” requirement)

Models emit 0–100. Normalize at ingestion:

score01 = round(score/100.0, 4)- Store both (

score,score01) plus the rationale - Keep dimension order fixed:

[reasoning, knowledge, clarity, faithfulness, coverage]

This prevents scale drift, enables cross-model comparison, and makes the Δ-field analysis meaningful.

Determinism & fairness knobs

- Temperature: 0–0.2 for scoring (stability > creativity).

- Identical context: pass the same goal/answer/context to every model.

- Token budget: trim to decision-critical snippets (but the same trim across models).

- Strict parser: reject outputs that violate the two-line format; log and retry once.

- Provenance: persist

model_name,model_version, prompt hash, and raw two lines.

Quick dimension blocks (drop-in text)

Use these inside the INSTRUCTIONS: section to retarget the same skeleton.

Reasoning

- Reward: explicit steps, correct chains, addressed edge cases, stated assumptions with checks.

- Penalize: leaps, circularity, contradictions, missing preconditions.

Clarity

- Reward: structure (lists, headings), concise phrasing, direct answers first, minimal fluff.

- Penalize: meandering, redundancy, buried ledes, jargon without necessity.

Faithfulness

- Reward: citations to the provided context, explicit limits, “cannot infer” when appropriate.

- Penalize: adding facts not in context, confident-but-wrong restatements.

Coverage

- Reward: touches all major facets implied by the goal, flags omissions explicitly.

- Penalize: single-facet answers to multi-facet questions, unacknowledged gaps.

Minimal parser (pseudo-Python)

def parse_two_line(output: str) -> tuple[str,int]:

lines = [l.strip() for l in output.strip().splitlines() if l.strip()]

assert len(lines) == 2 and lines[0].lower().startswith("rationale:") and lines[1].lower().startswith("score:")

rationale = lines[0].split(":",1)[1].strip()

score = int(lines[1].split(":",1)[1].strip())

assert 0 <= score <= 100

return rationale, score

On failure: record the raw text, one retry with a format-only fixer prompt, then mark as parser_error.

What this buys us downstream

- Auditable judgments (rationales) you can spot-check and learn from.

- Comparable numbers across models and runs (0–1 scale, fixed dimension order).

- Stronger Δ-signals: because each score was produced under the same disciplined reasoning routine.

Common pitfalls & quick fixes

- Drift into narrative → tighten “Be strict and concise”; cap rationale to ~300 chars.

- Dimension bleed → explicitly say “judge only this facet; ignore style/other facets.”

- Over-lenient 80–90s → add concrete failure modes in Penalize and examples of 60–74.

- Parser pain → keep “RETURN FORMAT (exactly two lines)” verbatim, fail fast on mismatch.

Bottom line: Scoring is not a number, it’s a procedure. Give the model a narrow job, unambiguous criteria, and a hard output contract. Do it per dimension, normalize, and log the rationale. That’s how you turn foundation models into consistent, high-signal judges and that’s what makes the gap field visible.

🔎 The Chat-Analyze Agent: how raw chats become labeled training data

This is the piece that turns conversations into numbers. It walks each user→assistant turn, applies our dimension-specific judges, parses the replies, and persists clean, normalized scores your downstream GAP pipeline can trust.

At a high level:

- Ingest turns from memory (or a provided batch).

- Load the right prompt for each dimension (Reasoning, Knowledge, Clarity, Faithfulness, Coverage).

- Call the judge LLM with

(goal, user, assistant[, context]). - Parse the strict 2-line response →

{rationale, score}. - Normalize score

0–100 → 0–1, record provenance, and persist. - Emit per-turn artifacts for dashboards (e.g., show Knowledge on the chat UI).

↩️ What it returns (example)

📊 knowledge_llm Dimension Scores conversation_turn:5962

+------------+-------+--------+----------------------------------------------+

| Dimension | Score | Weight | Rationale (preview) |

+------------+-------+--------+----------------------------------------------+

| reasoning | 95 | 1.0 | Coherent, technically accurate explanation… |

| knowledge | 95 | 1.0 | Correct details of Epistemic HRM Scorer… |

| clarity | 98 | 1.0 | Exceptionally clear and well-structured… |

| faithfulness| 95 | 1.0 | Matches code structure and purpose… |

| coverage | 95 | 1.0 | Addresses all key facets of the question… |

| FINAL | 95.6 | | Weighted average |

+------------+-------+--------+----------------------------------------------+

👩💻 Minimal pseudocode (drop-in mental model)

def run_chat_analyze(context):

# 0) Source turns

turns = context.get("chats") or memory.chats.list_turns_with_texts(

min_assistant_len=50, limit=cfg.limit, order_desc=False

)

analyzed = []

for row in turns:

# Skip if already scored (unless force_rescore)

if row.get("ai_score") and not cfg.force_rescore:

continue

user_txt = row.get("user_text", "").strip()

asst_txt = row.get("assistant_text", "").strip()

if not user_txt or not asst_txt:

continue

# 1) Create/lookup a 'goal' from the user turn (provenance anchor)

goal = memory.goals.get_or_create({

"goal_text": user_txt,

"description": "Created by ChatAnalyzeAgent",

"pipeline_run_id": context.get("pipeline_run_id"),

"meta": {"source": "chat_analyze_agent"},

})

per_dim = {}

for dim in cfg.dimensions: # ["reasoning","knowledge","clarity","faithfulness","coverage"]

# 2) Load the dimension-specific prompt template

prompt = prompt_loader.from_file(f"{dim}.txt", cfg, {**row, **context})

# 3) Call LLM judge

raw = prompt_service.run_prompt(prompt, {**row, **context})

# 4) Parse strict output

parsed = parse_judge(raw) # -> {"rationale": str, "score": int 0..100}

score01 = parsed["score"] / 100.0

per_dim[dim] = ScoreResult(

dimension=dim,

score=score01,

source="knowledge_llm",

rationale=parsed["rationale"],

attributes={"raw_response": raw, "score100": parsed["score"]},

)

# Optional: write Knowledge score back to chat for GUI

if dim == "knowledge":

memory.chats.set_turn_ai_eval(

turn_id=row["id"], score=parsed["score"], rationale=parsed["rationale"]

)

# 5) Persist as one bundle (with provenance)

scoring.save_bundle(

bundle=ScoreBundle(results=per_dim),

scorable=Scorable(id=row["assistant_message_id"], text=asst_txt, target_type=CONVERSATION_TURN),

context={**context, "goal": goal.to_dict()},

cfg=cfg, agent_name="chat_analyze_agent", scorer_name="knowledge_llm", source="knowledge_llm",

model_name="llm",

)

analyzed.append({

"turn_id": row["assistant_message_id"],

"score": per_dim["knowledge"].attributes["score100"], # convenience for UI

"rationale": per_dim["knowledge"].rationale,

})

return {**context, "analyzed_turns": analyzed}

👖 How the prompt loader fits

-

Template per dimension (

reasoning.txt,knowledge.txt, …) contains:- the system role, the input slots (

goal_text,user_text,assistant_text,context,preferences), and - the strict two-line return format.

- the system role, the input slots (

-

The loader simply renders the right template with the turn payload, so judges see identical structure run-to-run.

💰 Implementation tips

- Normalization: always divide the 0–100 by 100 before any analytics (GAP, topology, viz).

- Strict parsing: enforce the 2-line contract; fail closed (raise

ParseError) and log the raw response. - Idempotency: use

force_rescoreto override; otherwise skip already-scored turns. - Provenance: store

turn_id,assistant_message_id,goal_id,scorer_name/version,pipeline_run_id. - Batching & retries: add small jittered retries for judge calls; backoff on rate limits.

- Guardrails: drop turns with missing text, or those exceeding your token/char budget for the judges.

With this agent in place, you get a clean, reproducible labeled corpus that reflects how well answers perform along our five dimensions ready for GAP analysis, model training, and Visual-AI diagnostics.

🏗️ Model Building

(HRM + Tiny) and a shared protocol so they can actually be compared

This section is about how we build the models and, more importantly, how we make their outputs commensurable.

🤷 What we’re building

-

HRM (re-intro) We reuse the HRM architecture from our earlier post (linking there for details), and treat it as our high-fidelity reference scorer.

-

Tiny (from scratch) We implement the Tiny scorer end-to-end: model → trainer → scorer. Tiny is intentionally small and fast so we can iterate quickly, ship to edge boxes, and stress the tooling.

-

A shared protocol of attributes We extend both HRM and Tiny to report the same, standardized set of diagnostic attributes alongside their per-dimension scores. This protocol is what lets us align two very different systems without forcing one to imitate the other’s internals.

Why this matters: direct “metric mapping” (e.g., “uncertainty ≈ logvar here, ≈ entropy there”) looked neat on paper but failed in practice different models compute/represent those notions differently. Our fix is to define the outputs we want (common semantics) and make each model emit them in a canonical format.

🤝 The shared protocol SCM

We call the protocol SCM (Shared Canonical Metrics). Each model must produce:

-

Per-dimension, normalized scores (0–1)

scm.reasoning.score01,scm.knowledge.score01,scm.clarity.score01,scm.faithfulness.score01,scm.coverage.score01 -

Aggregate

scm.aggregate01simple average over the five dimensions (or a documented weighted average) -

Process diagnostics (model-agnostic definitions)

scm.uncertainty01normalized predictive uncertainty (e.g., entropy normalized by log-vocab)scm.ood_hat01out-of-distribution proxy (e.g., PPL normalized to a band)scm.consistency01internal consistency proxy (e.g., sigmoid of mean logprob blended with 1−uncertainty)scm.length_norm01normalized token length to discourage score inflation by verbosityscm.temp01temperature proxy (we mirror uncertainty here so downstream plots have a stable axis)scm.agree_hat01agreement proxy (e.g., logistic transform of mean logprob)

These are not the models’ native losses or hidden states; they’re standardized readouts. Each model computes its own way to populate them, but the semantics and scale are fixed so Δ-analysis (A−B) is well-posed.

🎨 What the section will cover

-

HRM recap (short, with link): objectives, inductive biases, where it shines.

-

Tiny build (full):

- architecture choices and why (small, stable, debuggable)

- training loop (datasets from Chat-Analyze Agent, loss design, stability guardrails)

- evaluation harness and scorer interface

-

Protocol integration (both models): how we compute each SCM field, normalization details, and test vectors to verify parity.

-

Why this protocol beats ad-hoc mappings: examples where naive “map X→Y” failed, and how SCM gives clean apples-to-apples deltas.

Bottom line: We’re not trying to make Tiny “be HRM.” We’re making both models speak the same measurement language. Once they emit SCM, we can compare, visualize, and reason about the gap field with confidence.

👑 The Hierarchical Reasoning Model: A Deep Reasoning Engine

While Tiny is our fast inner loop, HRM is the deep, high-fidelity judge we lean on when we need comprehensive reasoning diagnostics.I

💖 We have seen HRM before

| What | Description |

|---|---|

| 📚 Layers of Thought | Blog post where we go over how we integrated HRM into Stephanie |

| 🧑🎤 Model | Model Implementation Source Code |

| 🏋️♀️ Trainer | Class Used to train the HRM model |

| ⚽ Scorer | The scoring implementation for the HRM class |

👯 The Dual-Recurrent Architecture

HRM’s power comes from its two coupled recurrent networks operating at different temporal scales:

# Hierarchical recurrent modules

self.l_module = RecurrentBlock(2 * self.h_dim, self.l_dim, name="LModule")

self.h_module = RecurrentBlock(self.l_dim + self.h_dim, self.h_dim, name="HModule")All right can you please address this

This creates a processing hierarchy where:

- Low-level (L) module performs fine-grained analysis (4 steps per cycle)

- High-level (H) module integrates information across longer time horizons (1 step per cycle)

During evaluation, HRM executes this hierarchical processing across multiple cycles:

for cycle in range(self.n_cycles):

# Low-level fine-grained processing (T steps)

for step in range(self.t_steps):

l_input = torch.cat([x_tilde, zH], dim=-1)

zL = self.l_module(zL, l_input)

# High-level abstract update (1 step per cycle)

h_input = torch.cat([zL, zH], dim=-1)

zH = self.h_module(zH, h_input)

This dual-frequency approach allows HRM to capture both detailed reasoning steps and higher-level patterns making it particularly effective for complex, multi-hop reasoning tasks.

🟰 Multi-Dimensional Quality Assessment

Unlike simple scoring systems, HRM generates a rich diagnostic surface across five key reasoning dimensions we’ve defined:

| Dimension | What HRM Measures |

|---|---|

| Reasoning | Logical structure, multi-hop soundness, handling of assumptions |

| Knowledge | Factual accuracy and specificity |

| Clarity | Organization, readability, and directness |

| Faithfulness | Consistency with context/goal, absence of hallucination |

| Coverage | Completeness across key facets |

For each dimension, HRM doesn’t just produce a score it generates a comprehensive diagnostic profile:

# Core diagnostic heads

self.score_head = nn.Linear(self.h_dim, 1) # quality logits

self.logvar_head = nn.Linear(self.h_dim, 1) # aleatoric uncertainty

self.aux3_head = nn.Linear(self.h_dim, 3) # bad/medium/good aux

self.disagree_head = nn.Linear(self.h_dim, 1) # predicted disagreement

self.consistency_head = nn.Linear(self.h_dim, 1) # robustness proxy

self.ood_head = nn.Linear(self.h_dim, 1) # OOD proxy

# (optionally) temperature / calibration head for score scaling

This produces not just a score (0-100), but also:

uncertainty: How confident is this score?consistency_hat: How robust is the score to input variations?ood_hat: Is this response out-of-distribution?jacobian_fd: How sensitive is the score to tiny input changes?

🔗 How HRM populates the shared protocol (SCM)

To compare HRM with Tiny, both speak SCM (Shared Canonical Metrics). HRM fills:

| SCM field | How HRM computes it (intuition) |

|---|---|

scm.<dim>.score01 |

sigmoid(calibrated(score_head)) per dimension → [0,1] |

scm.aggregate01 |

mean of the five score01 (or documented weighted mean) |

scm.uncertainty01 |

normalized entropy / uncertainty from logvar_head or logits |

scm.consistency01 |

blend of sigmoid(mean_logprob) and 1−uncertainty01 |

scm.ood_hat01 |

normalized proxy from ood_head or PPL banding |

scm.length_norm01 |

token-length min–max clamp to [0,1] |

scm.temp01 |

mirrors uncertainty (stable axis for visuals) |

scm.agree_hat01 |

agreement proxy from score logit / mean logprob |

Scale discipline: HRM produces score01 ∈ [0,1] for SCM; UI “/100” views are derived by

round(100*score01)for readability.

🏅 Why HRM Matters for This Comparison

HRM serves as our gold-standard reasoning evaluator the deep, comprehensive system against which we measure Tiny’s lightweight approach. The key insight is that HRM and Tiny aren’t competing systems they’re complementary layers in Stephanie’s cognitive architecture.

HRM is designed for:

- Deep multi-step reasoning validation

- Complex plan analysis

- Comprehensive quality assessment

While powerful, HRM’s strength comes with computational cost making it less suitable for:

- Real-time refinement

- Edge deployment

- Continuous self-correction

This is precisely where Tiny enters the picture, not to replace HRM but to amplify it with a fast, recursive inner loop that handles the “polishing” work before responses reach users or trigger deeper HRM analysis.

By understanding HRM’s deep reasoning capabilities, we can better appreciate how Tiny’s lightweight approach captures the essential patterns that make reasoning good without the computational overhead.

❗ The Disagree Head what it is (and why it matters)

We reference a “disagree head” in diagrams; here’s the explicit meaning:

-

What it predicts: A proxy for where Tiny and HRM are likely to diverge on quality for the same input.

-

How it trains: Using past pairs where we observed an absolute delta (e.g.,

|score01_hrm − score01_tiny|) above a margin; we treat that as a target disagreement event. The head learns a logit → probability that such a divergence will occur again on similar patterns. -

How we use it:

- If

sigmoid(disagree_head)is high, route the case to HRM (don’t trust Tiny alone). - If low, Tiny’s light-weight signal is usually safe, keeping latency down.

- If

SCM mapping: scm.agree_hat01 = 1 − sigmoid(disagree_head) gives a standardized agreement confidence (1 = likely to agree).

Intuition: the head isn’t “reading Tiny’s mind”; it learns situations (content/process patterns in

zH) where Tiny historically missed nuance HRM caught (e.g., multi-hop edge cases, subtle factual grounding).

🧯 Stability guardrails (what we fixed)

Earlier we hit heteroscedastic loss blow-ups (exp(-log_var)) on non-reasoning heads. Fixes:

- Softplus floor on

log_var(prevents extreme negatives), - Gradient clipping across heads,

- Per-head loss caps to stabilize batches.

Result: all five dimensions train cleanly; numbers stay finite.

graph TD

%% Title and Input Section

A[🎯 HRM Hierarchical Reasoning Model<br/>Multi-Head Architecture] --> B[📥 Input Layer]

B --> C[🔮 Input Projector<br/>x → x̃]

%% Hierarchical Core Processing

C --> D{🔄 Hierarchical Core<br/>Dual Recurrent Processing}

D --> E[🐢 Low-Level Module L<br/>Fine-grained Analysis<br/>T steps per cycle]

D --> F[🐇 High-Level Module H<br/>Abstract Reasoning<br/>1 step per cycle]

E --> G[🔄 State Feedback Loop]

F --> G

G --> D

%% Final States

D --> H[💎 Final States<br/>zL_final + zH_final]

%% Primary Scoring Pathway

H --> I[🌡️ Temperature Head<br/>τ calibration]

H --> J[⭐ Score Head<br/>Quality logits]

I --> K[🎯 Primary Score<br/>score01 ∈ 0,1<br/>Temperature calibrated]

J --> K

%% Uncertainty & Confidence Heads

H --> L[📊 LogVar Head<br/>Aleatoric uncertainty]

H --> M[🔢 Aux3 Head<br/>Bad/Medium/Good]

L --> N[✅ Certainty01<br/>Uncertainty measure]

M --> O[📶 Entropy Aux<br/>Confidence score]

%% Agreement & Robustness Heads

H --> P[⚔️ Disagree Head<br/>HRM-Tiny disagreement]

H --> Q[🛡️ Consistency Head<br/>Robustness prediction]

P --> R[🔄 Disagree Hat<br/>Predicted disagreement]

Q --> S[🎯 Consistency Hat<br/>Robustness score]

%% Specialized Diagnostic Heads

H --> T[🚫 OOD Head<br/>Out-of-distribution]

H --> U[🔁 Recon Head<br/>Input reconstruction]

H --> V[📏 Jacobian FD<br/>Sensitivity analysis]

T --> W[🎯 OOD Hat<br/>Anomaly detection]

U --> X[📐 Recon Sim<br/>Comprehension quality]

V --> Y[📊 Jacobian FD<br/>Input sensitivity]

%% Evidence Accumulation

H --> Z[🛑 Halt Signal<br/>Evidence accumulation]

Z --> AA[🎲 Halt Prob<br/>Pseudo-halting]

%% Styling and Grouping

classDef input fill:#e3f2fd,stroke:#1976d2,stroke-width:2px,color:#0d47a1

classDef core fill:#fff3e0,stroke:#e65100,stroke-width:3px

classDef primary fill:#e8f5e8,stroke:#2e7d32,stroke-width:3px

classDef uncertainty fill:#fce4ec,stroke:#c2185b,stroke-width:2px

classDef agreement fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

classDef diagnostic fill:#f3e5f5,stroke:#7b1fa2,stroke-width:2px

classDef evidence fill:#fff8e1,stroke:#ff8f00,stroke-width:2px

class A,B,C input

class D,E,F,G core

class I,J,K primary

class L,M,N,O uncertainty

class P,Q,R,S agreement

class T,U,V,W,X,Y diagnostic

class Z,AA evidence

%% Legend

subgraph Legend[📖 Legend - Head Types]

L1[🟩 Primary Scoring] --> L2[🟥 Uncertainty & Confidence]

L2 --> L3[🟦 Agreement & Robustness]

L3 --> L4[🟪 Specialized Diagnostics]

L4 --> L5[🟨 Evidence Accumulation]

end

🎯 Why Tiny? And How the Gap Emerged

We didn’t set out to “prove a theory.” We saw the Tiny paper on Hugging Face, loved the idea of a compact DNN that sits between heavyweight models and applications, and knew instantly it fit Stephanie’s architecture. We implemented it because it was useful fast, small, and easy to deploy where HRM is too heavy. That was the whole plan.

Then something clicked.

🧮 From “stacking signals” to “subtracting signals”

Our first idea was straightforward: append Tiny’s diagnostics to HRM’s output to get more information per turn. Extra signal, same data. Great.

But while wiring that up, we asked a different question: What if we subtract instead of append?

If two evaluators look at the same conversation and we align their outputs into the same schema (SCM), then the difference between them should reveal something real:

$$[ \Delta(h) = \text{HRM}(h) - \text{Tiny}(h) ]$$We didn’t know there was anything meaningful in Δ. We suspected there had to be call it a Holmes-style deduction: remove everything both models agree on; what remains is the interesting part.

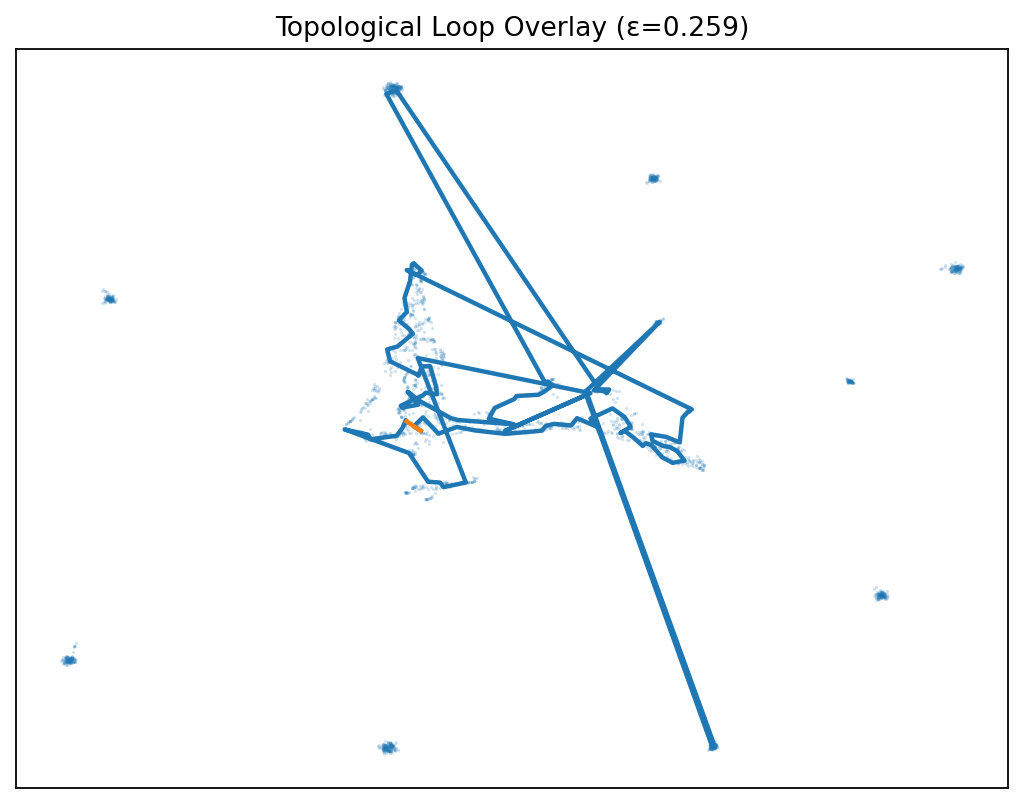

✔️ The moment of confirmation: Betti numbers

We ran persistent homology on Δ and the Betti-1 counts spiked consistently. The topology of the gap wasn’t noise; it had structure (loops) that stayed under resampling. That was the “oh wow” moment. We still can’t name the exact cause of every loop but like electricity, you don’t have to fully explain it to measure, improve, and use it.

🎯 What we’re actually after

Our north star is self-improving AI especially learning from hallucinations rather than just suppressing them. Tiny gave us a new lens. HRM gave us depth. Δ gave us a map of where evaluators diverge in systematic ways. That map is where:

- escalation policies get smart (when Tiny says “I’m uncertain/OOD/disagree,” hand off to HRM),

- training data gets targeted (hotspots on the Δ field),

- and future posts (next: “learning from hallucination”) get their raw material.

👍 Why Tiny was the right instrument (even before Δ)

- It runs in milliseconds and can live at the edge or in inner loops.

- It produces diagnostics (uncertainty, OOD, sensitivity, agreement) we can align with HRM via SCM.

- It gave us a second, independently trained viewpoint on the same data exactly what you need to make Δ meaningful.

📑 How to replicate the pivot (in three steps)

- Align: score the same conversations with two evaluators (we used HRM and Tiny) and write both to SCM (0–1, same keys/order).

- Subtract: compute (\Delta = A - B) per turn (dimension-wise).

- Probe: run PH (Vietoris–Rips), examine Betti-1 persistence; cluster Δ-hotspots for inspection and distillation.

We started by stacking, but the real insight came from subtracting. Tiny didn’t just add signal it revealed where signal differs, and that’s the raw ore we can mine.

❌ What this isn’t

- It’s not a claim that Tiny “beats” HRM. They’re complementary.

- It’s not a proof of a specific cognitive mechanism. It’s a measurement pipeline that repeatedly shows structure where naïvely you’d expect noise.

🔜 What comes next

- We’ll use Δ-hotspots to drive targeted training and hallucination learning (next post).

- We’ll keep strengthening SCM so any new scorer can be dropped in and compared locally or on HF.

That’s the honest path: we implemented Tiny because it was obviously useful. The gap emerged when we switched from adding to subtracting, and the topology told us we’d found something worth pursuing.

🌀 The Tiny Recursion Model and our instrumented Tiny+

Tiny is a small, recursive evaluator that operates directly in embedding space. It produces a fast, multi-signal judgment about a response (quality, uncertainty, OOD, sensitivity, etc.). Tiny+ is our instrumented version of Tiny: same core, but wired into our SCM (Shared Canonical Metrics) protocol and extended with a few probes that make Tiny and HRM directly comparable for Δ-field analysis.

One-liner: Tiny is a general-purpose, parameter-efficient evaluator; Tiny+ is how we adapted it for Stephanie’s gap work.

🤔 Why Tiny exists (beyond our stack)

Tiny stands on its own. It’s useful wherever you need cheap, consistent signals about model outputs:

- Edge / low-latency scoring on CPU

- Cost-aware routing (decide when to call a heavier judge/model)

- Eval/A-B pipelines as a stable, repeatable rater

- Drift & health monitoring (OOD + sensitivity) on production traffic

- Retrieval/reranking (blend quality, uncertainty, and stability)

- Teacher–assistant distillation (soft targets + confidence)

We use Tiny+ inside Stephanie to align with HRM and visualize Δ, but the architecture is system-agnostic.

🏢 Architecture at a glance

graph TD

%% Title and Input Section

A["🤖 Tiny Recursion Model (Tiny+)<br/>Multi-Head Recursive Architecture"All right] --> B[🎯 Triple Input Layer]

B --> C[📥 Goal Embedding x]

B --> D[💬 Response Embedding y]

B --> E[🌀 Initial Latent z]

%% Recursive Fusion Core

C --> F{🔄 Recursive Fusion Core<br/>N Recursion Steps}

D --> F

E --> F

F --> G["🔗 State Fusion<br/>x ⊕ y ⊕ z → z_next"]

G --> H[🏗️ Core Processing<br/>MLP/Attention Blocks]

H --> I[🛑 Halting Signal<br/>Step-wise accumulation]

I --> J[⚖️ Residual Update<br/>z = z + step_scale × z_next]

J --> F

%% SAE Bottleneck

F --> K[💎 Final State z_final]

K --> L[🧠 Sparse Autoencoder<br/>SAE Bottleneck]

L --> M[🔍 Concept Codes c<br/>Sparse representation]

L --> N[🎛️ Head State z_head<br/>SAE reconstruction]

%% Primary Scoring Pathway

N --> O[🌡️ Temperature Head<br/>τ calibration]

N --> P[⭐ Score Head<br/>Quality logits]

O --> Q["🎯 Primary Score<br/>s ∈ 0,1<br/>Temperature calibrated"]

P --> Q

%% Uncertainty & Confidence Heads

N --> R[📊 LogVar Head<br/>Aleatoric uncertainty]

N --> S[🔢 Aux3 Head<br/>Bad/Medium/Good]

R --> T[✅ Certainty01<br/>Uncertainty measure]

S --> U[📶 Entropy Aux<br/>Confidence score]

%% Agreement & Disagreement Heads

N --> V[⚔️ Disagree Head<br/>HRM-Tiny disagreement]

N --> W[🤝 Agree Head<br/>Cross-model agreement]

V --> X[🔄 Disagree Hat<br/>Predicted disagreement]

W --> Y[🎯 Agree01<br/>Agreement probability]

%% Robustness & Reconstruction Heads

N --> Z[🛡️ Consistency Head<br/>Robustness prediction]

N --> AA[🔁 Recon Head<br/>Response reconstruction]

Z --> BB[🎯 Consistency Hat<br/>Robustness score]

AA --> CC[📐 Recon Sim<br/>Reconstruction quality]

%% Specialized Diagnostic Heads

N --> DD[🚫 OOD Head<br/>Out-of-distribution]

N --> EE[📏 Jacobian FD<br/>Sensitivity analysis]

N --> FF[📏 Causal Sens Head<br/>Perturbation sensitivity]

DD --> GG[🎯 OOD Hat<br/>Anomaly detection]

EE --> HH[📊 Jacobian FD<br/>Input sensitivity]

FF --> II[🎯 Sens01<br/>Sensitivity measure]

%% Length Normalization

JJ[📏 Sequence Length] --> KK[⚖️ Length Effect<br/>Normalization]

KK --> LL[📐 Len Effect<br/>Length adjustment]

%% Legacy Outputs

N --> MM[📚 Classifier Head<br/>Legacy vocab logits]

I --> NN[🛑 Halt Logits<br/>Step accumulation]

%% Styling and Grouping

classDef input fill:#e1f5fe,stroke:#01579b,stroke-width:2px

classDef core fill:#fff3e0,stroke:#e65100,stroke-width:3px

classDef sae fill:#e8f5e8,stroke:#2e7d32,stroke-width:3px

classDef primary fill:#e8f5e8,stroke:#2e7d32,stroke-width:3px

classDef uncertainty fill:#fce4ec,stroke:#c2185b,stroke-width:2px

classDef agreement fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

classDef robustness fill:#fff3e0,stroke:#ff6f00,stroke-width:2px

classDef diagnostic fill:#f3e5f5,stroke:#7b1fa2,stroke-width:2px

classDef legacy fill:#f5f5f5,stroke:#616161,stroke-width:2px

class A,B,C,D,E input

class F,G,H,I,J core

class L,M,N sae

class O,P,Q primary

class R,S,T,U uncertainty

class V,W,X,Y agreement

class Z,AA,BB,CC robustness

class DD,EE,FF,GG,HH,II diagnostic

class JJ,KK,LL,MM,NN legacy

%% Legend

subgraph Legend[📖 Legend - Head Types]

L1[🟩 Primary Scoring] --> L2[🟥 Uncertainty & Confidence]

L2 --> L3[🟦 Agreement & Disagreement]

L3 --> L4[🟧 Robustness & Reconstruction]

L4 --> L5[🟪 Specialized Diagnostics]

L5 --> L6[⬜ Legacy & Utilities]

end

Inputs

x: goal/condition embedding[B, D]y: response embedding[B, D]z: latent state (init zeros)[B, D]

Core loop (n recursions)

- Fuse

[x, y, z] → z'viaLinear + tanh - Process with a compact stack (MLP or MHA blocks)

- Update

z ← z + step_scale * z'(residual step) - Track halting logits (max over steps)

Bottleneck

- SAE (Sparse Autoencoder) over

z_final→ sparse codesc - Reconstruct to

z_headfor all output heads (acts like a concept layer)

Heads (multi-task)

- Score, (log) uncertainty, OOD, consistency/robustness, temperature proxy

- Agreement/disagreement (for parity), finite-difference sensitivity

- Reconstruction similarity (for concept fidelity)

# Core residual block (MLP) or attention variant

class TinyBlock(nn.Module): ...

class TinyBlockAttn(nn.Module): ...

# Recursion (sketch)

fused = torch.cat([x, y, z], -1)

z_next = torch.tanh(W @ fused) → core blocks

z = z + step_scale * z_next # repeat n times

# SAE bottleneck → heads

c = SAE_enc(z_final) # sparse codes

z_head = z_final + SAE_dec(c) # head input

# Heads read from z_head (score/logvar/ood/consistency/etc.)

🚧 Numerical safety & calibration (baked in)

- Aleatoric clamp:

log_var ∈ [−5, 5](prevents precision explosions) - Temperature calibration:

tau = 0.5 + 0.5 * softplus(·),score01 = σ(score_logit / tau)(stable, calibrated) - Sensitivity proxy: finite-difference on a normalized perturbation, clipped

- Length proxy:

length_norm01 = (tanh(len/L)+1)/2avoids “long = always bad”

These keep Tiny stable across 4k–8k token regimes and varied difficulty.

🗣️ Heads → SCM (so Tiny and HRM speak the same language)

Tiny+ writes aligned telemetry into SCM, so Δ = HRM − Tiny is meaningful:

| SCM key | Tiny(+ ) source (range) | Meaning |

|---|---|---|

scm.<dim>.score01 |

σ(score_logit / tau) (0..1) |

Per-dimension quality (copy to each dim you evaluate) |

scm.aggregate01 |

mean of per-dim score01 |

Overall quality |

scm.uncertainty01 |

1 − σ(−log_var) (0..1) |

Aleatoric uncertainty |

scm.consistency01 |

σ(consistency_logit) (0..1) |

Robustness to masking/perturbations |

scm.ood_hat01 |

σ(ood_logit) (0..1) |

Out-of-distribution proxy |

scm.temp01 |

σ(tau_raw) (0..1) |

Temperature/entropy proxy (alignment key) |

scm.jacobian_fd |

clipped FD sensitivity (0..1) | Score sensitivity to small input changes |

scm.length_norm01 |

bounded length proxy (0..1) | Normalized response length effect |

scm.agree_hat01 |

1 − σ(disagree_logit) (0..1) |

Predicted agreement with a reference judge (HRM in our stack) |

scm.recon_sim01 |

cosine(ŷ, y) mapped to (0..1) | Concept fidelity via SAE reconstruction |

Bonus: expose

concept_sparsityfor Visual-AI panes (instant concept heat).

➕ Why Tiny is more than “HRM but small”

Different objective and operating point:

- HRM does deep semantic validation; Tiny diagnoses meta-signals agreement, uncertainty, OOD, sensitivity that tell you when light-weight judgment is safe vs. when to escalate.

- Tiny runs on fixed embeddings with compact recursion; it’s cheap enough for the inner loop and edge.

The combination builds a cost-aware evaluator: Tiny handles the confident in-distribution mass; it forwards the risky tail to HRM.

🗳️ Practical decision patterns

- High

ood_hat01+ highagree_hat01→ unusual, but HRM likely agrees → ok to serve. - High

ood_hat01+ lowagree_hat01→ unusual and likely disagreement → escalate. - High

jacobian_fd+ lowconsistency01→ fragile judgment → escalate. - High

uncertainty01+ lowtemp01→ uncertain & poorly calibrated → caution.

👀 SAE bottleneck: making the unseen visible

The SAE forces a sparse concept code. Two wins:

- Interpretability:

concept_sparsityandrecon_sim01show when Tiny relies on a small, stable set of “ideas” vs. diffuse noise. - Transfer: concepts that remain predictive across datasets tend to align with durable reasoning patterns great anchors for Δ-attribution.

👶 Minimal “generic” API (no Stephanie dependencies)

# Example usage outside Stephanie

x = embed(goal_text) # [B, D]

y = embed(response_text) # [B, D]

z0 = torch.zeros_like(x)

_, _, _, aux = tiny(x, y, z0, seq_len=len_tokens(response_text), return_aux=True)

result = {

"score01": float(aux["score"].mean()),

"uncertainty01": float(aux["uncertainty01"].mean()),

"ood01": float(aux["ood_hat01"].mean()),

"consistency01": float(aux["consistency_hat"].mean()),

"sensitivity01": float(aux["jacobian_fd"].mean()),

"length_norm01": float(aux["length_norm01"].mean()),

}

# Use for routing, monitoring, ranking, or eval.

If you adopt SCM, just map these to your preferred keys (we use scm.* for cross-model alignment).

🎲 Design choices that mattered

- Clamp

log_varand deriveuncertainty01 = 1 − σ(−log_var)(monotone, stable, interpretable). - Use

temp01 = σ(tau_raw)for alignment; keeptaufor calibration math. - Sensitivity proxy: normalize perturbations and clip

jacobian_fd. - SAE α ≈ 0.05: enough sparsity pressure without crushing expressivity.

- Length proxy: bounded

tanh(len/L)mapped to[0,1]avoids pathological length effects.

🌀 Tiny+ in Stephanie (what’s different)

- SCM wiring so Tiny and HRM align 1:1

- Agreement/disagreement head tuned for HRM parity and Δ analysis

- Visual-AI first: outputs designed to render as “turns × features” images for instant diagnosis

This is how we compute Δ = HRM − Tiny per turn/dimension and then study its topology (e.g., persistent loops).

📅 What’s next in this post

Up next is the full Tiny source (model), followed by the trainer and the scorer wrapper. If you just want the gist, the sections above are enough to implement a compatible Tiny. If you enjoy digging into details: the code that follows is production-hardened, numerically safe, and instrumented for Δ-field work.

Tiny Recursion Model: View full source

# stephanie/scoring/model/tiny_recursion.py

"""

Tiny Recursion Model (Tiny+) - Parameter-Efficient Recursive Neural Architecture

This module implements a compact, recursive neural network for multi-task evaluation

of AI model responses. The architecture combines recursive state updates with

multi-head output predictions, enabling efficient quality assessment across

multiple dimensions from embedding inputs.

Key Innovations:

- Recursive latent state updates with halting mechanisms

- Sparse Autoencoder (SAE) bottleneck for interpretable concepts

- Multi-head prediction for comprehensive quality assessment

- Heteroscedastic uncertainty estimation

- In-graph consistency regularization

Architecture Overview:

1. Recursive fusion of goal (x), response (y), and latent (z) states

2. Core processing blocks (attention or MLP-based)

3. SAE bottleneck for sparse concept representation

4. Multi-head prediction for scores, uncertainty, and auxiliary tasks

"""

from __future__ import annotations

from typing import Any, Dict, Optional, Tuple

import torch

import torch.nn as nn

import torch.nn.functional as F

# ---------------------------

# Core Building Blocks

# ---------------------------

class TinyBlock(nn.Module):

"""

Basic residual block: LayerNorm → MLP → residual connection.

Supports both 2D [batch, features] and 3D [batch, sequence, features] inputs.

Uses GELU activation and dropout for regularization.

"""

def __init__(self, d_model: int, dropout: float = 0.1):

super().__init__()

self.ln = nn.LayerNorm(d_model)

self.mlp = nn.Sequential(

nn.Linear(d_model, d_model * 4), # Expansion factor 4

nn.GELU(),

nn.Dropout(dropout),

nn.Linear(d_model * 4, d_model), # Projection back

nn.Dropout(dropout),

)

def forward(self, x: torch.Tensor) -> torch.Tensor:

"""Apply residual block: x + MLP(LayerNorm(x))"""

return x + self.mlp(self.ln(x))

class TinyBlockAttn(nn.Module):

"""

Attention-enhanced residual block with Multi-Head Self-Attention.

Architecture: LN → MHA → residual → TinyBlock → residual

Automatically handles 2D/3D inputs and returns same dimensionality.

"""

def __init__(self, d_model: int, n_heads: int = 4, dropout: float = 0.1):

super().__init__()

self.ln_attn = nn.LayerNorm(d_model)

self.attn = nn.MultiheadAttention(

embed_dim=d_model,

num_heads=n_heads,

dropout=dropout,

batch_first=True # [batch, seq, features]

)

self.drop = nn.Dropout(dropout)

self.ff = TinyBlock(d_model, dropout=dropout)

def forward(self, x: torch.Tensor) -> torch.Tensor:

"""

Forward pass with automatic shape handling.

Args:

x: Input tensor of shape [B, D] or [B, L, D]

Returns:

Output tensor with same shape as input

"""

squeeze_back = False

if x.dim() == 2:

x = x.unsqueeze(1) # [B, D] → [B, 1, D]

squeeze_back = True

q = k = v = self.ln_attn(x)

h, _ = self.attn(q, k, v, need_weights=False)

x = x + self.drop(h) # Residual connection

x = self.ff(x) # Feed-forward with residual

if squeeze_back:

x = x.squeeze(1) # [B, 1, D] → [B, D]

return x

# ---------------------------

# Tiny Recursion Model (Tiny+)

# ---------------------------

class TinyRecursionModel(nn.Module):

"""

Parameter-efficient recursive model for multi-task evaluation.

Recursively updates latent state z using goal (x) and response (y) embeddings

over multiple steps. Features comprehensive multi-head prediction and

sparse autoencoder bottleneck for interpretable representations.

Core Components:

- Recursive state fusion: [x, y, z] → z'

- Core processing stack: Attention or MLP blocks

- SAE bottleneck: Sparse concept encoding

- Multi-head prediction: 12 specialized output heads

Inputs:

x: Goal/condition embedding [B, D]

y: Response embedding [B, D]

z: Initial latent state [B, D] (typically zeros)

Outputs:

logits: Classification logits [B, vocab_size] (legacy compatibility)

halt_logits: Halting signal logits [B]

z_final: Final latent state after recursion [B, D]

aux: Dictionary of auxiliary predictions and metrics

"""

def __init__(

self,

d_model: int = 256,

n_layers: int = 2,

n_recursions: int = 6,

vocab_size: int = 1024,

use_attention: bool = False,

dropout: float = 0.1,

attn_heads: int = 4,

step_scale: float = 0.1, # Residual scaling for state updates

consistency_mask_p: float = 0.10, # Mask probability for consistency regularization

len_norm_L: float = 512.0, # Length normalization constant

enable_agree_head: bool = True, # Enable agreement prediction head

enable_causal_sens_head: bool = True, # Enable sensitivity prediction head

):

super().__init__()

# Model configuration

self.d_model = d_model

self.n_layers = n_layers

self.n_recursions = n_recursions

self.vocab_size = vocab_size

self.use_attention = use_attention

self.step_scale = step_scale

self.consistency_mask_p = consistency_mask_p

self.len_norm_L = float(len_norm_L)

self.enable_agree_head = enable_agree_head

self.enable_causal_sens_head = enable_causal_sens_head

# Core processing stack

if use_attention:

blocks = [TinyBlockAttn(d_model, n_heads=attn_heads, dropout=dropout)

for _ in range(n_layers)]

else:

blocks = [TinyBlock(d_model, dropout=dropout) for _ in range(n_layers)]

self.core = nn.Sequential(*blocks)

# State fusion: combine goal, response, and latent states

self.z_proj = nn.Linear(d_model * 3, d_model) # [x, y, z] → z'

self.final_ln = nn.LayerNorm(d_model)

# Core prediction heads

self.halt_head = nn.Linear(d_model, 1) # Halting signal logits

self.classifier = nn.Linear(d_model, vocab_size) # Legacy classification

# Extended prediction heads

self.score_head = nn.Linear(d_model, 1) # Quality score ∈ [0,1]

self.logvar_head = nn.Linear(d_model, 1) # Aleatoric uncertainty (log-variance)

self.aux3_head = nn.Linear(d_model, 3) # 3-way classification

self.disagree_head = nn.Linear(d_model, 1) # Disagreement prediction

self.recon_head = nn.Linear(d_model, d_model) # Embedding reconstruction

self.consistency_head = nn.Linear(d_model, 1) # Robustness prediction

self.ood_head = nn.Linear(d_model, 1) # OOD detection

self.temp_head = nn.Linear(d_model, 1) # Temperature calibration

# Bridge heads

self.agree_head = nn.Linear(d_model, 1) # Cross-model agreement

self.causal_sens_head = nn.Linear(d_model, 1) # Perturbation sensitivity

# Sparse Autoencoder (SAE) bottleneck

self.sae_enc = nn.Sequential(

nn.Linear(d_model, d_model // 2), # Compression

nn.ReLU(),

nn.LayerNorm(d_model // 2),

)

self.sae_dec = nn.Linear(d_model // 2, d_model) # Reconstruction

self.sae_alpha = 0.05 # SAE reconstruction loss weight

# Regularization

self.head_drop = nn.Dropout(dropout)

@staticmethod

def _cos01(a: torch.Tensor, b: torch.Tensor, dim: int = -1, eps: float = 1e-6) -> torch.Tensor:

"""

Compute cosine similarity mapped from [-1, 1] to [0, 1].

Args:

a, b: Input tensors to compare

dim: Dimension for cosine computation

eps: Numerical stability term

Returns:

Cosine similarity in range [0, 1] where 1 = identical

"""

sim = F.cosine_similarity(a, b, dim=dim, eps=eps)

return (sim + 1.0) * 0.5

def _recur(

self,

x: torch.Tensor,

y: torch.Tensor,

z: torch.Tensor,

) -> Tuple[torch.Tensor, torch.Tensor, torch.Tensor, torch.Tensor, torch.Tensor]:

"""

Execute recursive state updates over n_recursions steps.

Process:

1. Fuse [x, y, z] → z_next via projection and activation

2. Process through core network stack

3. Update halting signals

4. Apply residual state update: z = z + step_scale * z_next

5. Apply SAE bottleneck to final state

Args:

x: Goal embedding [B, D]

y: Response embedding [B, D]

z: Initial latent state [B, D]

Returns:

z_final: Final latent state after recursion [B, D]

z_head: SAE-processed state for prediction heads [B, D]

halt_logits: Maximum halting logits across steps [B, 1]

tau: Temperature parameter for score calibration [B, 1]

c: Sparse concept codes from SAE bottleneck [B, D//2]

"""

B = x.size(0)

device = x.device

# Initialize halting signals to very negative values

halt_logits = torch.full((B, 1), -1e9, device=device)

z_cur = z # Current latent state

# Recursive state updates

for _ in range(self.n_recursions):

fused = torch.cat([x, y, z_cur], dim=-1) # [B, 3 * D]

z_next = torch.tanh(self.z_proj(fused)) # [B, D] with saturation

z_next = self.core(z_next) # [B, D] core processing

# Update halting signal (track maximum across steps)

step_halt = self.halt_head(self.final_ln(z_next)) # [B, 1]

halt_logits = torch.maximum(halt_logits, step_halt)

# Residual state update with step scaling

z_cur = z_cur + self.step_scale * z_next

# Final normalization

z_final = self.final_ln(z_cur) # [B, D]

# Sparse Autoencoder bottleneck

c = self.sae_enc(z_final) # [B, D//2] concept codes

z_head = z_final + self.sae_dec(c) # [B, D] with SAE reconstruction

z_head = self.head_drop(z_head) # Regularization

# Temperature calibration parameter (τ ∈ (0.5, ∞))

tau_raw = self.temp_head(z_head)

tau = 0.5 + 0.5 * F.softplus(tau_raw) # Lower bound at 0.5

return z_final, z_head, halt_logits, tau, c

def forward(

self,

x: torch.Tensor, # Goal embedding [B, D]

y: torch.Tensor, # Response embedding [B, D]

z: torch.Tensor, # Initial latent state [B, D]

*,

seq_len: Optional[torch.Tensor] = None, # Response length [B] (optional)

return_aux: bool = True, # Whether to return auxiliary outputs

with_consistency_target: bool = True, # Compute consistency regularization

) -> Tuple[torch.Tensor, torch.Tensor, torch.Tensor, Dict[str, Any]]:

"""

Complete forward pass with recursive processing and multi-head prediction.

"""

# Main recursive processing

z = z.clone() # Ensure we don't modify input

z_final, z_head, halt_logits, tau, c = self._recur(x, y, z)

# Core prediction heads

logits = self.classifier(z_head) # [B, vocab_size]

score_logit = self.score_head(z_head) # [B, 1]

log_var = self.logvar_head(z_head) # [B, 1] uncertainty

# ----- NUMERICAL SAFETY -----

LOGVAR_MIN, LOGVAR_MAX = -5.0, 5.0

log_var = log_var.clamp(min=LOGVAR_MIN, max=LOGVAR_MAX)

# Use tau for calibration; keep a stable proxy for telemetry

# NOTE: move temp01 to sigmoid(tau_raw) for cross-model alignment

tau_raw = self.temp_head(z_head)

tau = 0.5 + 0.5 * F.softplus(tau_raw)

tau_safe = torch.clamp(tau, min=1e-2)

s = torch.sigmoid(score_logit / tau_safe)

# ----- Core auxiliaries

aux3_logits = self.aux3_head(z_head)

aux3_probs = F.softmax(aux3_logits, dim=-1)

disagree_logit = self.disagree_head(z_head)

y_recon = self.recon_head(z_head)

ood_logit = self.ood_head(z_head)

# Optional bridge heads

agree01 = torch.sigmoid(self.agree_head(z_head)) if self.enable_agree_head else None

sens01 = torch.sigmoid(self.causal_sens_head(z_head)) if self.enable_causal_sens_head else None

# Consistency target

mask = (torch.rand_like(z_head) < self.consistency_mask_p).float()

z_masked = z_head * (1.0 - mask)

cos_consistency = self._cos01(z_head, z_masked).unsqueeze(-1)

consistency_logit = self.consistency_head(z_head)

# Finite-difference sensitivity

eps = 1e-3

y_eps = y + eps * F.normalize(torch.randn_like(y), dim=-1)

with torch.no_grad():

_, z_head_eps, _, tau_eps, _ = self._recur(x, y_eps, z)

tau_eps_safe = torch.clamp(tau_eps, min=1e-2)

score_eps = torch.sigmoid(self.score_head(z_head_eps) / tau_eps_safe)

jac_fd = ((score_eps - s).abs() / eps).clamp(0, 10.0) / 10.0

# Length effect

if seq_len is not None:

len_effect = torch.tanh((seq_len.float() / self.len_norm_L)).unsqueeze(-1)

else:

len_effect = torch.zeros_like(s)

length_norm01 = (len_effect + 1.0) * 0.5

# ----- Aligned telemetry keys -----

certainty01 = torch.sigmoid(-log_var)

uncertainty01 = 1.0 - certainty01

temp01 = torch.sigmoid(tau_raw) # aligned proxy in [0,1]

ood_hat01 = torch.sigmoid(ood_logit)

halt_prob = torch.sigmoid(halt_logits).unsqueeze(-1) if halt_logits.dim()==1 else torch.sigmoid(halt_logits)

# Device-safe normalized entropy (in [0,1])

logK = torch.log(torch.tensor(3.0, device=z_head.device, dtype=z_head.dtype))

entropy_aux = (-(aux3_probs * F.log_softmax(aux3_logits, -1)).sum(-1) / logK).unsqueeze(-1)

aux: Dict[str, Any] = {

# raw heads you need for training

"score_logit": score_logit,

"log_var": log_var,

"aux3_logits": aux3_logits,

"disagree_logit": disagree_logit,

"y_recon": y_recon,

"consistency_logit": consistency_logit,

"consistency_target": cos_consistency.detach(),

# aligned derived telemetry (all ∈ [0,1])

"score": s,

"certainty01": certainty01,

"uncertainty01": uncertainty01, # < NEW (correct)

"uncertainty": uncertainty01, # < OPTIONAL alias for back-compat

"aux3_probs": aux3_probs,

"entropy_aux": entropy_aux,

"disagree_hat": torch.sigmoid(disagree_logit),

"recon_sim": self._cos01(y_recon, y).unsqueeze(-1),

"consistency_hat": torch.sigmoid(consistency_logit),

"concept_sparsity": (c > 0).float().mean(dim=-1, keepdim=True),

"ood_hat01": ood_hat01, # < NEW aligned name

"temp01": temp01, # < changed to sigmoid(tau_raw)

"jacobian_fd": jac_fd,

"len_effect": len_effect,

"length_norm01": length_norm01, # < NEW 0..1 length proxy

"halt_prob": halt_prob, # < NEW

}

if agree01 is not None:

aux["agree01"] = agree01

if sens01 is not None:

aux["sens01"] = sens01

return logits, halt_logits.squeeze(-1), z_final, (aux if return_aux else {})

🔁 Training the Tiny Recursion Model: Building the Cognitive Microscope

“Tiny isn’t just a smaller HRM it’s a specialized diagnostic tool trained to spot exactly where HRM’s strengths and weaknesses live.”

Now that we’ve built the Tiny Recursion Model architecture, let’s explore how we train it to become Stephanie’s cognitive microscope. This is where the magic happens: transforming a simple neural architecture into a system that can diagnose reasoning quality with surgical precision.

🌬️ The Training Pipeline: From Raw Chats to Diagnostic Signals

Tiny’s training pipeline is designed to be:

- Per-dimension: Each of the five reasoning dimensions (reasoning, knowledge, clarity, faithfulness, coverage) gets its own trained model

- Data-driven: Trained on high-quality conversation turns annotated by our ChatAnalyze Agent

- Diagnostic-focused: Trained to predict not just scores, but uncertainty, agreement with HRM, sensitivity to perturbations, and other diagnostic signals

Let’s walk through exactly how this works:

1. Data Preparation: Quality Chats for Quality Training

# stephanie/agents/maintenance/tiny_trainer.py

class TinyTrainerAgent(BaseAgent):

def __init__(self, cfg, memory, container, logger, full_cfg):

super().__init__(cfg, memory, container, logger)

self.dimensions = cfg.get("dimensions", []) # e.g., ["reasoning", "knowledge", "clarity", ...]

self.trainer = TinyTrainer(full_cfg.scorer.hrm, memory, container=container, logger=logger)

async def run(self, context: dict) -> dict:

results = {}

for dimension in self.dimensions:

pairs_by_dim = self.pair_builder.get_training_pairs_by_dimension(dimension=dimension)

samples = pairs_by_dim.get(dimension, [])

if not samples:

self.logger.log("NoSamplesFound", {"dimension": dimension})

continue

stats = self.trainer.train(samples, dimension)

if "error" not in stats:

results[dimension] = {"count": len(samples), **stats}

context["training_stats"] = results

return context

This agent is the orchestrator of Tiny’s training journey. It:

- Iterates through each reasoning dimension

- Fetches training pairs specific to that dimension

- Trains a separate Tiny model for each dimension

- Logs detailed statistics for each training run

The key insight here: Tiny is trained per-dimension. This isn’t arbitrary it’s because each dimension of reasoning requires different diagnostic signals. A model trained to assess knowledge won’t be as good at assessing clarity, and vice versa.

2. Training Configuration: Precision Tuning for Recursive Reasoning

# stephanie/scoring/training/tiny_recursion_trainer.py

class TinyRecursionTrainer(BaseTrainer):

def __init__(self, cfg, memory, container, logger):

super().__init__(cfg, memory, container, logger)

# - Identity / paths -

self.model_type = "tiny"

self.target_type = cfg.get("target_type", "document")

self.version = cfg.get("model_version", "v1")

# - Core knobs -

self.epochs = int(cfg.get("epochs", 20))

self.lr = float(cfg.get("lr", 3e-5)) # conservative default

self.batch_size = int(cfg.get("batch_size", 16))

self.dropout = float(cfg.get("dropout", 0.1))

self.use_attention = bool(cfg.get("use_attention", False))

self.n_recursions = int(cfg.get("n_recursions", 6))

This configuration is where Tiny’s “personality” is set. Let’s unpack the critical choices:

-

lr = 3e-5: A conservative learning rate that prevents the model from overshooting during training. Tiny’s recursive nature means small updates can have significant downstream effects. -

n_recursions = 6: The number of refinement steps Tiny takes during evaluation. This was carefully tuned to balance computational efficiency with reasoning depth fewer steps would be too shallow, more steps would be computationally expensive. -

dropout = 0.1: A modest dropout rate that prevents overfitting while preserving the model’s ability to capture subtle reasoning patterns. -

batch_size = 16: A small batch size that helps stabilize training for Tiny’s recursive architecture.

These settings aren’t arbitrary they’re the result of extensive experimentation to find the sweet spot where Tiny can learn diagnostic signals without overfitting or becoming computationally expensive.

3. Training Loop: The Recursive Refinement Process

# stephanie/scoring/training/tiny_recursion_trainer.py

def train(self, samples, dimension):

# ... data preparation ...

for epoch in range(self.epochs):

avg_loss = self._train_epoch(model, dataloader, epoch_idx=epoch)

# ... validation ...

if avg_loss < best_loss - 1e-4:

best_loss = avg_loss

wait = 0

else:

wait += 1

if wait >= patience:

break

# ... save model and metadata ...

This training loop is where the magic happens. Tiny doesn’t just learn to predict scores it learns to predict everything that matters for reasoning quality:

- Core score prediction: The primary quality score (0-100)

- Heteroscedastic uncertainty: How confident the model is in its own score

- Agreement with HRM: Whether Tiny thinks its score aligns with HRM’s

- Sensitivity to perturbations: How much Tiny’s score changes with small input changes

- OOD detection: Whether the input is out-of-distribution

The “wait” mechanism is particularly important it implements early stopping when Tiny stops improving, preventing overfitting and saving computational resources.

4. Specialized Heads: The Diagnostic Toolkit

# stephanie/models/tiny_recursion.py

class TinyRecursionModel(nn.Module):

def __init__(...):

# ... existing init ...

# ✨ TINY+ AUX HEADS

self.score_head = nn.Linear(d_model, 1) # final calibrated score

self.logvar_head = nn.Linear(d_model, 1) # heteroscedastic uncertainty

self.aux3_head = nn.Linear(d_model, 3) # bad/mid/good classifier

self.disagree_head = nn.Linear(d_model, 1)

self.causal_sens_head = nn.Linear(d_model, 1)

# ... and more ...

This is where Tiny becomes more than just a scorer it becomes a diagnostic toolkit. Each head serves a specific purpose:

score_head: The primary quality score that becomes ourscm.<dim>.score01in the SCM protocollogvar_head: Measures aleatoric uncertainty (how noisy the data is)disagree_head: Predicts whether Tiny’s score will disagree with HRM’scausal_sens_head: Measures how sensitive Tiny’s score is to tiny input changesood_head: Flags out-of-distribution inputs that might be risky

These heads are trained together, allowing Tiny to learn the relationships between different diagnostic signals. For example, when Tiny is uncertain (logvar_head high), it’s also more likely to disagree with HRM (disagree_head high).

5. Training Losses: The Mathematical Foundation

Tiny’s training doesn’t just optimize for one thing it optimizes for multiple signals simultaneously:

# stephanie/scoring/training/tiny_recursion_trainer.py

def _train_epoch(self, model, dataloader, epoch_idx):

# ... training loop ...

loss = self.w_score * score_loss + \

self.w_uncertainty * uncertainty_loss + \

self.w_disagree * disagree_loss + \

self.w_sens * sensitivity_loss + \

self.w_ood * ood_loss

# ... backpropagation ...

Each loss component has its own weight:

w_score: Primary quality score prediction (most important)w_uncertainty: Aleatoric uncertainty predictionw_disagree: Agreement with HRM predictionw_sens: Sensitivity to input perturbationsw_ood: Out-of-distribution detection

These weights are carefully tuned to balance the different aspects of reasoning quality. For example, w_score is typically higher than w_ood because the primary job is to assess quality, with OOD detection being a secondary concern.

🔗 Working together towards a goal

The real magic of Tiny’s training isn’t in the individual components it’s in how they work together:

- Per-dimension training: Each dimension gets its own specialized model that understands the unique characteristics of that reasoning aspect

- Multi-task learning: Tiny learns to predict multiple diagnostic signals simultaneously, creating a rich diagnostic profile

- Shared canonical metrics: All outputs are mapped to a standardized format (SCM) that aligns with HRM’s outputs

- Diagnostic-focused: Instead of just predicting scores, Tiny learns to predict why a score is what it is

This training approach is what transforms Tiny from a simple scorer into Stephanie’s cognitive microscope a system that can diagnose reasoning quality with surgical precision and tell us exactly where and why it agrees or disagrees with HRM.

Tiny Recursion Trainer: View full source

# stephanie/scoring/training/tiny_recursion_trainer.py

"""

TinyRecursionModel Trainer (Tiny+)

A specialized trainer for the TinyRecursionModel that implements multi-objective

training with heteroscedastic regression and auxiliary losses. This trainer handles

multiple data schemas and produces dimension-specific models with comprehensive

training telemetry.

Key Features:

- Heteroscedastic regression for score prediction with uncertainty estimation

- Multiple auxiliary objectives: bucket classification, disagreement, reconstruction

- Support for various input schemas (native, singleton, pairwise, HRM)

- Comprehensive training monitoring and validation

- Early stopping and model checkpointing

"""

from __future__ import annotations

import math

import os

from collections import Counter

from datetime import datetime

from typing import Any, Dict, List, Optional, Tuple

import logging

import torch

import torch.nn.functional as F

from torch import optim

from torch.utils.data import DataLoader, TensorDataset

from stephanie.scoring.model.tiny_recursion import TinyRecursionModel

from stephanie.scoring.training.base_trainer import BaseTrainer

try:

from tqdm.auto import tqdm

except Exception: # pragma: no cover

tqdm = None

_logger = logging.getLogger(__name__)

def _bucket3(y01: torch.Tensor) -> torch.Tensor:

"""

Convert continuous scores to 3-class bucket labels.

Args:

y01: Tensor of scores in range [0, 1]

Returns:

Long tensor with bucket indices:

- 0: scores < 1/3

- 1: scores in [1/3, 2/3)

- 2: scores >= 2/3

"""

# <1/3 => 0, [1/3,2/3) => 1, >=2/3 => 2

edges = torch.tensor([1/3, 2/3], device=y01.device, dtype=y01.dtype)

return (y01 >= edges[0]).long() + (y01 >= edges[1]).long()

class TinyTrainer(BaseTrainer):

"""

Trainer for TinyRecursionModel (Tiny+) with multi-objective optimization.

This trainer implements a comprehensive training regimen that combines:

- Main heteroscedastic regression objective

- Multiple auxiliary objectives for regularization and feature learning

- Support for various input data formats And see this was a complete waste of timed

- Extensive monitoring and validation

The model produces separate instances for each quality dimension.

Attributes:

model_type: Identifier for model architecture ("tiny")

target_type: Type of scoring target ("document", "sentence", etc.)

version: Model version identifier

epochs: Number of training epochs

lr: Learning rate for optimizer

batch_size: Training batch size

dropout: Dropout rate for model regularization

use_attention: Whether to use attention mechanisms

n_recursions: Number of recursion steps in model

halt_lambda: Weight for halting regularization loss

grad_clip: Gradient clipping value

w_aux3: Weight for 3-class auxiliary classification

w_disagree: Weight for disagreement prediction

w_recon: Weight for reconstruction loss

w_cons: Weight for consistency regularization

w_sae_recon: Weight for sparse autoencoder reconstruction

w_ood: Weight for out-of-distribution detection

"""

def __init__(self, cfg, memory, container, logger):

"""Initialize TinyTrainer with configuration and dependencies."""

super().__init__(cfg, memory, container, logger)

# --- Identity / paths -------------------------------------------------

self.model_type = "tiny"

self.target_type = cfg.get("target_type", "document")

self.version = cfg.get("model_version", "v1")

# --- Core knobs -------------------------------------------------------

self.epochs = int(cfg.get("epochs", 20))

self.lr = float(cfg.get("lr", 3e-5)) # conservative default

self.batch_size = int(cfg.get("batch_size", 16))

self.dropout = float(cfg.get("dropout", 0.1))

self.use_attention = bool(cfg.get("use_attention", False))

self.n_recursions = int(cfg.get("n_recursions", 6))

self.halt_lambda = float(cfg.get("halt_lambda", 0.05)) # halting is a light regularizer

self.grad_clip = float(cfg.get("grad_clip", 0.5))

# Aux loss weights

self.w_aux3 = float(cfg.get("w_aux3", 0.3))

self.w_disagree = float(cfg.get("w_disagree", 0.3))

self.w_recon = float(cfg.get("w_recon", 0.2))

self.w_cons = float(cfg.get("w_consistency", 0.2))

self.w_sae_recon = float(cfg.get("w_sae_recon", 0.0)) # 0 = off by default

self.w_ood = float(cfg.get("w_ood", 0.0)) # 0 = off by default

# --- Telemetry --------------------------------------------------------

self.show_progress = bool(cfg.get("show_progress", True))

self.progress_every = max(1, int(cfg.get("progress_every", 500)))

self.log_every_steps = max(1, int(cfg.get("log_every_steps", 50)))

self.label_hist_bucket = int(cfg.get("label_hist_bucket", 10))

self.log_label_histogram = bool(cfg.get("log_label_histogram", True))

# --- Validation / reproducibility ------------------------------------

self.validation_ratio = float(cfg.get("validation_ratio", 0.1))

self.seed = int(cfg.get("seed", 42))

torch.manual_seed(self.seed)

if torch.cuda.is_available():

torch.cuda.manual_seed(self.seed)

# --- Model ------------------------------------------------------------

self.model = TinyRecursionModel(

d_model=self.dim,

n_layers=int(cfg.get("n_layers", 2)),

n_recursions=self.n_recursions,